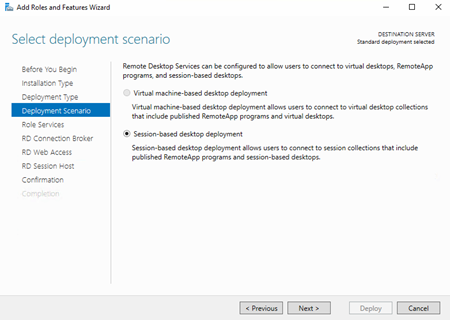

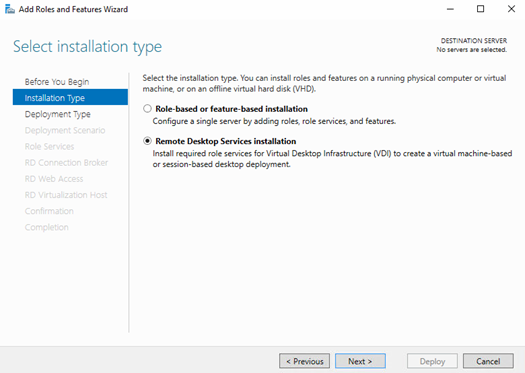

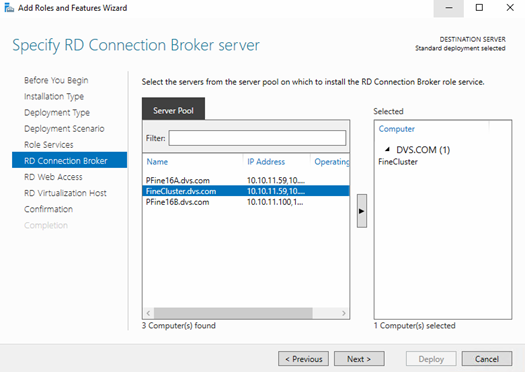

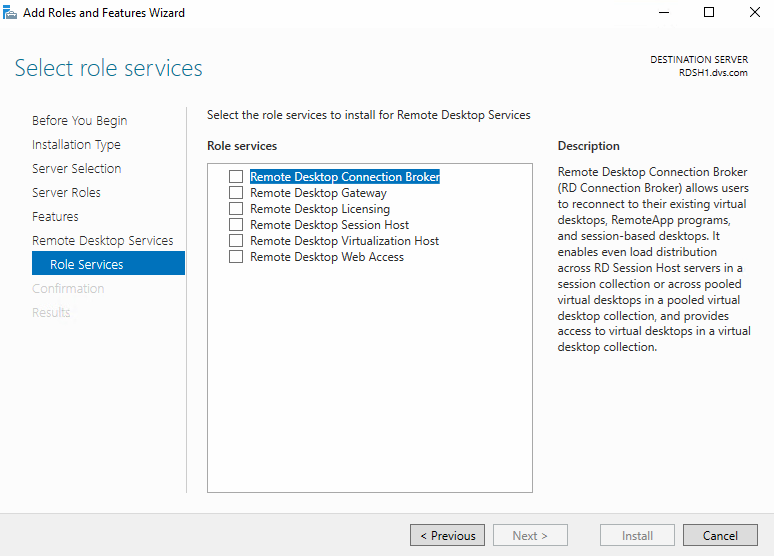

I first explored this topic several years ago for Server 2012, and that post remained applicable through the R2 lifecycle. Now that Server 2016 RDS is alive and well, let’s see if anything has changed. What was true then is still true now, and was true way back when this was known as Terminal Services: a host’s physical or virtual resources are shared among several simultaneously connected users, each of whom runs applications installed locally on the server. Remote Desktop Session Host, or RDSH, is what we call “session-based” virtualization: each user is provided apps or a full desktop experience, but each user is simply a remote session, and all sessions exist within a single Windows Server OS. An RDSH host can be physical or virtual, with virtual now more common because you get the additional benefits of virtualization, including clustering and HA. This is in stark contrast to desktop virtualization, aka VDI, in which each user is assigned a pooled or persistent desktop VM with dedicated hardware resources provisioned from a server hypervisor. RDSH works great for virtualizing applications such as Office and web apps. In short, RDSH = a single Server OS that users connect to, with all CPU and RAM shared amongst all. Desktop virtualization = several desktop VMs, each with its own assigned CPU and RAM.

What comes with Windows Server?

Starting in Server 2008 R2, a feature called Dynamic Fair Share Scheduler (DFSS) was introduced, which aimed to proactively and dynamically distribute CPU time based on the number of active sessions and the load on each. After all, CPU is the resource consistently hit hardest in this use case. Additional drivers were added in Server 2012 to fairly share network and disk utilization, but not memory. All of this remains true in Server 2016, with no apparent changes to the base mechanisms. Ultimately, if memory sharing between user sessions in RDSH presents a challenge for you, based on user or application behavior, adding third-party tools or moving to Hyper-V + desktop VMs may be a better option. That said, since your RDSH environment will likely be virtual, these elements are controlled on a per-RDSH-server-VM basis. Balancing user load across those RDSH instances is therefore the more important concern, as no single server instance will be allowed to overwhelm the host server.

The CPU scheduler, which is enabled by default, can be controlled via Group Policy; the disk and network components, also enabled by default, cannot.

This GPO element toggles the switch found in the registry at HKLM\System\CurrentControlSet\Control\Session Manager\Quota System:

The other Fair Share components can also be found in the registry, in a different location, and each provides a binary on/off switch.

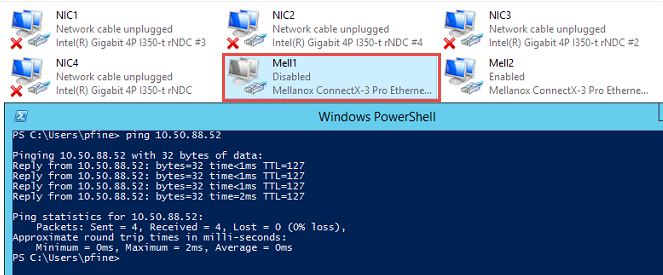

These elements can also be controlled via PowerShell, through the object returned by `gwmi win32_terminalservicesetting -N "root\cimv2\terminalservices"`. Here I have DFSS disabled but disk and network enabled. I'm not sure why network shows no value here:
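If you would rather script this check, the following minimal Python sketch reads the three switches straight from the registry. The value names it uses (`EnableCpuQuota` under the Quota System key, and `EnableFairShare` under `Services\TSFairShare\Disk` and `Services\TSFairShare\NetFS`) are my assumptions rather than something shown above, so verify them on your own build before relying on the output. On a non-Windows machine every switch is simply reported as not set.

```python
"""Report the state of the RDSH Fair Share switches (sketch, Windows only)."""
import sys
from typing import Dict, Optional

# ASSUMED registry locations, relative to HKLM; verify on your own build.
FAIR_SHARE_SWITCHES = {
    "CPU (DFSS)": (r"SYSTEM\CurrentControlSet\Control\Session Manager\Quota System",
                   "EnableCpuQuota"),
    "Disk": (r"SYSTEM\CurrentControlSet\Services\TSFairShare\Disk", "EnableFairShare"),
    "Network": (r"SYSTEM\CurrentControlSet\Services\TSFairShare\NetFS", "EnableFairShare"),
}


def read_dword(subkey: str, name: str) -> Optional[int]:
    """Return a DWORD value from HKLM, or None if it is missing or not on Windows."""
    if sys.platform != "win32":
        return None
    import winreg  # standard library, but only available on Windows

    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, subkey) as key:
            value, _value_type = winreg.QueryValueEx(key, name)
            return int(value)
    except OSError:  # key or value does not exist
        return None


def describe(value: Optional[int]) -> str:
    """Translate a binary on/off switch into a readable state."""
    if value is None:
        return "not set"
    return "enabled" if value != 0 else "disabled"


def fair_share_status() -> Dict[str, str]:
    """Map each Fair Share component to enabled / disabled / not set."""
    return {label: describe(read_dword(subkey, name))
            for label, (subkey, name) in FAIR_SHARE_SWITCHES.items()}


if __name__ == "__main__":
    for component, state in fair_share_status().items():
        print(f"{component}: {state}")
```

A missing value is reported as "not set" rather than guessed at, which is also how an empty network property like the one above would surface.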

Test Scenario: CPU Fair Share

The output below was generated using the following configuration. Please note that this does not represent an optimal or recommended RDSH configuration; it is intentionally low-spec to easily showcase this scenario:

- Host: Dell EMC PowerEdge R630

- Hypervisor: Server 2016 Hyper-V Datacenter

- RDSH: 1 x Server 2016 VM configured with 1 vCPU / 4 GB RAM

- Users: 3 total sessions represented by RDP-TCP, ICA-CGP/ pfine/b/c in the graph

- Workload: Prime95 blend option

- Monitoring: Perfmon captures from RDSH1 VM

This run shows three user sessions executing Prime95. I spaced the launches a few seconds apart, ending with all three competing for maximum CPU. As you can see, DFSS does its thing and limits CPU: each session gets 50% with two users active and 33% once all three are active.
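The 50% and 33% figures are just CPU divided evenly among the sessions competing for it. The toy model below reproduces that arithmetic for staggered launches. It assumes every launched session is CPU-bound and weighted equally, and it ignores idle time and anything else the real scheduler takes into account; the launch times are hypothetical.

```python
from typing import List


def fair_share_timeline(launch_times: List[float], sample_times: List[float]) -> List[float]:
    """Per-session CPU share (%) at each sample time, assuming every launched
    session is CPU-bound and CPU is split evenly among active sessions."""
    shares = []
    for t in sample_times:
        active = sum(1 for launch in launch_times if launch <= t)
        shares.append(100.0 / active if active else 0.0)
    return shares


# Hypothetical launch times (seconds) for the three sessions, a few seconds apart.
launches = [0.0, 5.0, 10.0]
samples = [2.0, 7.0, 12.0]
for t, share in zip(samples, fair_share_timeline(launches, samples)):
    print(f"t={t:>4}s  each active session gets {share:.0f}%")
```

Run as-is, it prints 100%, 50% and 33% for the three sample points, matching the steps in the graph.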

What about Citrix?

Citrix offers a number of products and services famously targeted at the EUC space, most notably XenApp and XenDesktop. Speaking of RDSH and user session/application virtualization specifically, XenApp essentially encompasses the connection of your RDSH VMs running the Citrix VDA (Virtual Delivery Agent) to the Citrix management infrastructure. “XenApp” is the larger holistic solution, which includes connection brokering, policies and management. For app and session delivery alone, you still need the RDSH role installed on Windows Server, with the VDA running in addition on each instance. Resource management was previously enabled via policies in Studio, but it has since migrated to Citrix Workspace Environment Management (WEM), which is the result of the recent Norskale acquisition and now represents the primary Citrix UEM tool. WEM does a whole lot more than resource management for RDSH, but for the context of this post, that’s where we’ll focus. WEM is available to XenApp & XenDesktop Enterprise or Platinum customers.

WEM requires a number of its own components, including Infrastructure Services (SQL DB, SyncFx), an agent for each RDSH instance and a management console. WEM includes a utility to create the database, another console for the infrastructure service configuration, and finally a console to manage WEM as applied to the XenApp infrastructure. Once everything is created, talking and operational, WEM will require access to a valid Citrix license server before you can use it. Needless to say, WEM is usable only within a Citrix infrastructure.

To get started, make sure your agents are talking to your broker; the broker is set via GPO on the computer objects of your RDSH VMs, using the ADMX file included with the installation media. Verify this in the WEM management console under Administration > Agents. If you don’t see your RDSH instances there, WEM is not working properly.

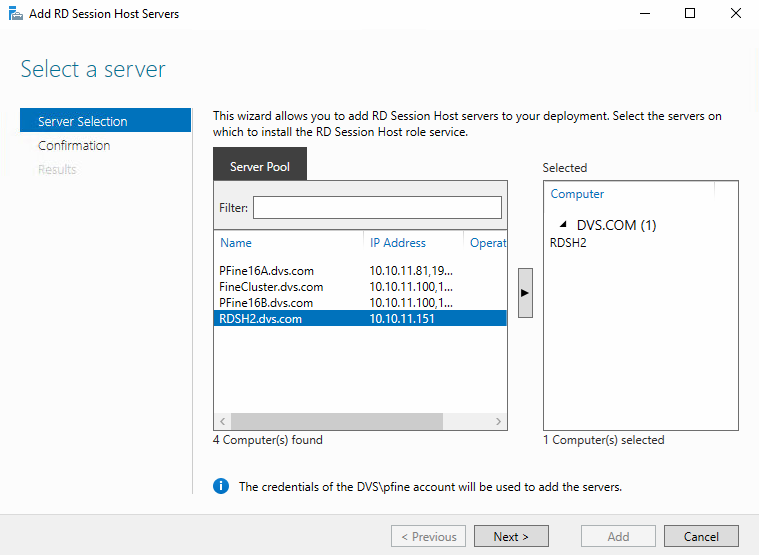

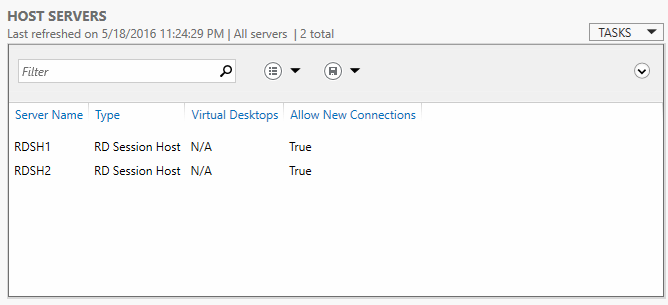

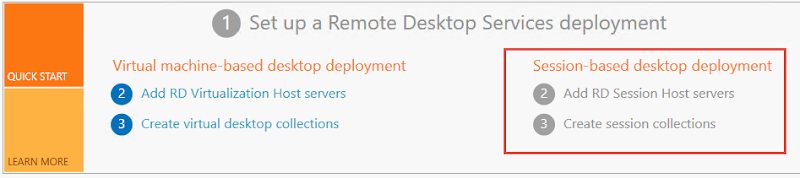

WEM provides a number of ways to control CPU, memory and IO utilization, all managed through configuration sets. Servers are placed into configuration sets, which tell the installed WEM agents which policies to apply; an agent can belong to only one set. If you want one policy to apply to XenApp hosts and another to XenDesktop hosts, you would create a configuration set for each, housing the applicable policies. For this example, I created a configuration set for my RDSH VMs that focuses on resource controls.
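The "one set per agent" rule is easiest to see as a simple data model. The sketch below is purely illustrative: the class names, set names and agent names are hypothetical, and this is not the WEM API or database schema. Keying membership by agent makes it structurally impossible for an agent to sit in two sets at once.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ConfigurationSet:
    """A named bundle of policies that agents in the set should apply."""
    name: str
    policies: List[str] = field(default_factory=list)


class AgentMembership:
    """Tracks which configuration set each agent belongs to (exactly one)."""

    def __init__(self) -> None:
        self._set_for_agent: Dict[str, ConfigurationSet] = {}

    def assign(self, agent: str, config_set: ConfigurationSet) -> None:
        # Keyed by agent: assigning again moves the agent instead of
        # adding it to a second set.
        self._set_for_agent[agent] = config_set

    def set_name(self, agent: str) -> str:
        return self._set_for_agent[agent].name

    def policies_for(self, agent: str) -> List[str]:
        return list(self._set_for_agent[agent].policies)


# Hypothetical sets and agents, for illustration only.
xenapp = ConfigurationSet("XenApp RDSH", ["CPU spike protection", "Working set optimization"])
xendesktop = ConfigurationSet("XenDesktop VDI", ["Working set optimization"])

membership = AgentMembership()
membership.assign("RDSH1", xenapp)
membership.assign("WIN10-VDI-01", xendesktop)
```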

The System Optimization tab houses the resource control options. The CPU section, which comes first, ranges from high-level to more granular controls. At a very basic level, CPU spike protection is intended to ensure that no process, unless excluded, will exceed the % limits you specify. Intelligent CPU and IO optimization, highlighted below, causes the agent to keep a list of processes that trigger spike protection. Repeat offenders are assigned a lower CPU priority at process launch. That is the key part of what this solution does: offending processes have their base priority adjusted so other processes can use the CPU.
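As I read it, the "intelligent" behavior boils down to bookkeeping plus a priority decision at launch. Here is a toy model of that loop. The `repeat_threshold` knob and the class name are hypothetical; WEM's actual sampling window and offender criteria are not shown here.

```python
from collections import Counter
from typing import Iterable


class SpikeTracker:
    """Toy model of intelligent CPU optimization: count spike-protection
    triggers per process and start repeat offenders at a lower priority."""

    def __init__(self, cpu_limit_percent: float, repeat_threshold: int = 2,
                 excluded: Iterable[str] = ()) -> None:
        self.cpu_limit_percent = cpu_limit_percent
        self.repeat_threshold = repeat_threshold  # hypothetical knob
        self.excluded = {name.lower() for name in excluded}
        self.offences: Counter = Counter()

    def record_sample(self, process: str, cpu_percent: float) -> bool:
        """Return True if this sample triggers spike protection."""
        name = process.lower()
        if name in self.excluded or cpu_percent <= self.cpu_limit_percent:
            return False
        self.offences[name] += 1
        return True

    def priority_at_launch(self, process: str) -> str:
        """Repeat offenders start at Low; everything else starts at Normal."""
        if self.offences[process.lower()] >= self.repeat_threshold:
            return "Low"
        return "Normal"
```

Note that nothing in this loop caps utilization. It only changes the starting priority, which is exactly what the test below ends up showing.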

To show this in action, I ran Prime95 in one of my XenApp sessions. As you can see below, the Prime95 process is running with a normal base priority and happily taking 100% of available CPU.

Once CPU spike protection is enabled, notice that the Prime95 process has been changed to Low priority, but it isn’t actually limited to 25% as my settings specify. What this appears to do in reality is make problem processes less important so that others can take CPU time if needed, but no hard limit seems to be enforced. I tried a number of scenarios here, including process clamping, varying %, and optimized vs. non-optimized, all in attempts to force Prime95 down to a specific % of CPU utilization. I was unsuccessful. CPU utilization never drops; other processes are allowed to execute and share the CPU, but that would happen normally with DFSS anyway.
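The difference between lowering priority and enforcing a cap is easy to see in a toy single-core scheduler. This is a deliberate simplification, not how Windows or WEM actually schedule threads (Windows, for one, boosts starved threads): each tick, the highest-priority runnable process gets the core, unless a hard cap has made it ineligible. The process names are hypothetical.

```python
from typing import Dict, Optional

# Higher number = higher scheduling priority (simplified).
PRIORITY_RANK = {"Low": 1, "BelowNormal": 2, "Normal": 3}


def simulate(processes: Dict[str, str], ticks: int = 100,
             cap_percent: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Percent of ticks each CPU-bound process receives on one core.

    Without a cap, the highest-priority runnable process wins each tick
    (ties share round-robin). With a cap, a process becomes ineligible once
    it has used its percentage of the elapsed ticks."""
    caps = cap_percent or {}
    used = {name: 0 for name in processes}
    turn = 0
    for tick in range(ticks):
        runnable = [name for name in processes
                    if used[name] < caps.get(name, 100.0) / 100.0 * (tick + 1)]
        if not runnable:
            continue  # the core idles this tick
        best = max(PRIORITY_RANK[processes[name]] for name in runnable)
        tied = [name for name in runnable if PRIORITY_RANK[processes[name]] == best]
        chosen = tied[turn % len(tied)]  # round-robin among equal priorities
        turn += 1
        used[chosen] += 1
    return {name: 100.0 * count / ticks for name, count in used.items()}
```

Run alone, a Low-priority process still gets the whole core, much like what I saw with Prime95. It only yields when a higher-priority process wants CPU, and only an explicit cap pins it to 25%.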

I also tried the CPU Eater process Citrix uses in their videos, thinking Prime95 might just be problematic, but if you look closely, even in their videos the policy never appears to actually limit CPU utilization. The base priority definitely changes, so that part works, but “spike optimization” does not appear to work at all. I’d be happy to be proven wrong on this if anyone has it working correctly.

For memory optimization, WEM offers “working set optimization,” which reduces the memory working set of particular processes once the specified idle time has elapsed. Following the Citrix recommendation, I set the policy to 5 minutes / 5%.

To test this, I opened a PDF in Chrome and waited for optimization to kick in. It works! The first image shows the Chrome process right after the PDF was opened; the second shows the process after optimization has worked its magic. That is a 99% reduction in working set memory, which is pretty cool.
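Here is a rough sketch of the idle check and the reduction arithmetic. It assumes the 5% in the policy is a CPU-usage level below which a process counts as idle, sampled once per minute. That is my interpretation, so check the WEM documentation for the exact semantics. The function names are hypothetical.

```python
from typing import List


def is_idle(cpu_history: List[float], idle_minutes: int = 5,
            idle_limit_percent: float = 5.0) -> bool:
    """True if each of the last `idle_minutes` one-minute CPU samples
    (oldest first) is below `idle_limit_percent`."""
    if idle_minutes <= 0:
        raise ValueError("idle_minutes must be positive")
    recent = cpu_history[-idle_minutes:]
    return len(recent) == idle_minutes and all(s < idle_limit_percent for s in recent)


def reduction_percent(before_mb: float, after_mb: float) -> float:
    """Percentage drop in working set between two measurements."""
    if before_mb <= 0:
        raise ValueError("before_mb must be positive")
    return 100.0 * (before_mb - after_mb) / before_mb
```

Requiring a full window of low samples keeps a process that just went quiet from being trimmed immediately.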

The IO Management feature allows the priorities of specific processes to be adjusted as desired, but it does not actually limit their IO.

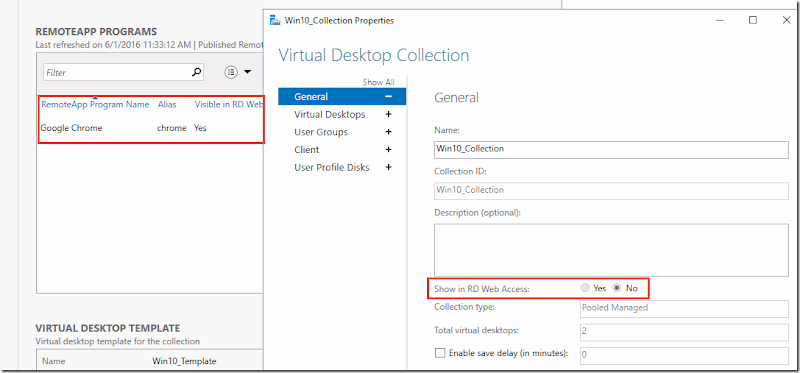

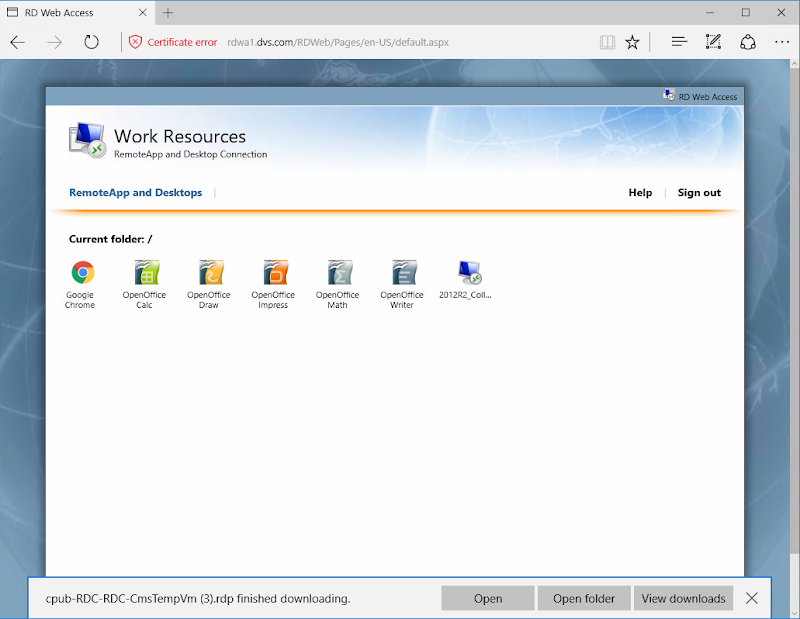

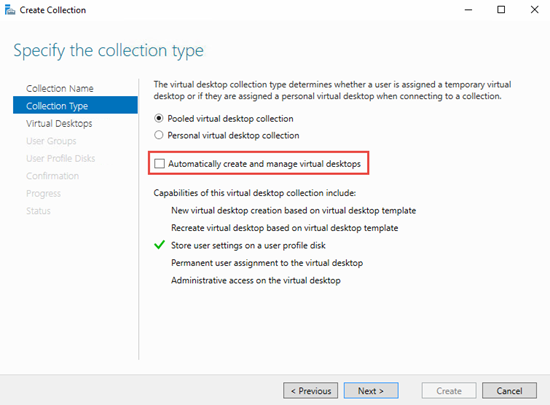

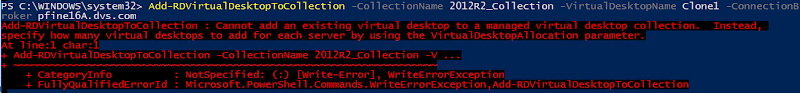

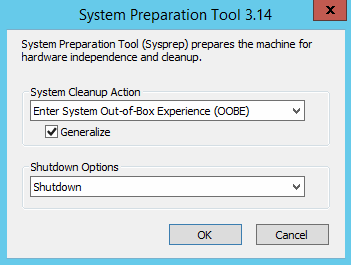

What about Citrix XenApp in Azure?

XenApp can also be run in Microsoft Azure via the Citrix XenApp Essentials offer. After the requisite Azure and Citrix Cloud subscriptions are established, the necessary XenApp infrastructure is easily created from the Compute catalog directly in Azure. The minimum number of users is 25, but your existing RDS CALs can be reused, and Citrix billing is consolidated on your Azure bill for ease. Azure provides a number of global methods to create, scale, automate and monitor your environment, but at the end of the day, we are still dealing with Windows Server VMs, RDSH and Citrix software. All the methods and rules discussed previously still apply, with one important exception: Citrix WEM is not available in Azure/Citrix Cloud, so DFSS is your best bet unless you turn to supported third-party software.

Once the XenApp infrastructure is deployed within Azure, the Citrix Cloud portal is then used to manage catalogs, resource publishing and entitlements.

What about VMware?

VMware also provides a number of methods to deploy and manage applications, namely ThinApp, AirWatch and App Volumes, while also providing full integration support for Microsoft RDSH via Horizon or Horizon Apps. For those keeping count, that’s FOUR distinct methods to deploy applications within Horizon. Similar to Citrix, VMware has its own UEM tool called… VMware UEM. But unlike the Citrix tool, VMware UEM can be used only for user and application management, not resource management.

While Horizon doesn’t provide any RDSH resource-optimizing capabilities directly, there are a few settings that help reduce resource-intensive activities, such as Adobe Flash throttling, which will become less compelling as the world slowly rids itself of Flash entirely.

VMware also runs memory and CPU load scripts on RDSH hosts within a Horizon environment. These VBScripts live in C:\Program Files\VMware\VMware View\Agent\scripts and generate Load Preference values that are reported back to the Connection Servers. The reported value is then used to determine which RDSH hosts receive new session connections: if CPU or memory utilization is already high on a given RDSH host, the View Connection Server (VCS) will send a new connection to a host with a lower reported load.
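Here is a minimal sketch of that feedback loop. It assumes the reported value is a coarse level such as LIGHT, NORMAL or HEAVY. The thresholds, the "worst of CPU and memory" rule and the tie-break on session count are all hypothetical, not the logic in VMware's scripts or the Connection Server.

```python
from dataclasses import dataclass
from typing import List, Optional

LOAD_ORDER = {"LIGHT": 0, "NORMAL": 1, "HEAVY": 2}


def load_preference(cpu_percent: float, mem_percent: float,
                    light_below: float = 50.0, heavy_above: float = 90.0) -> str:
    """Collapse CPU and memory utilization into a coarse preference value.
    Thresholds are hypothetical, not VMware's."""
    worst = max(cpu_percent, mem_percent)
    if worst < light_below:
        return "LIGHT"
    if worst > heavy_above:
        return "HEAVY"
    return "NORMAL"


@dataclass
class RdshHost:
    name: str
    cpu_percent: float
    mem_percent: float
    sessions: int


def pick_host(hosts: List[RdshHost]) -> Optional[RdshHost]:
    """Prefer the lowest reported load, then the fewest sessions."""
    if not hosts:
        return None
    return min(hosts, key=lambda h: (LOAD_ORDER[load_preference(h.cpu_percent, h.mem_percent)],
                                     h.sessions))
```

Because the broker only sees a coarse level, a host with more sessions can still win if it reports a lighter load, as in the test below.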

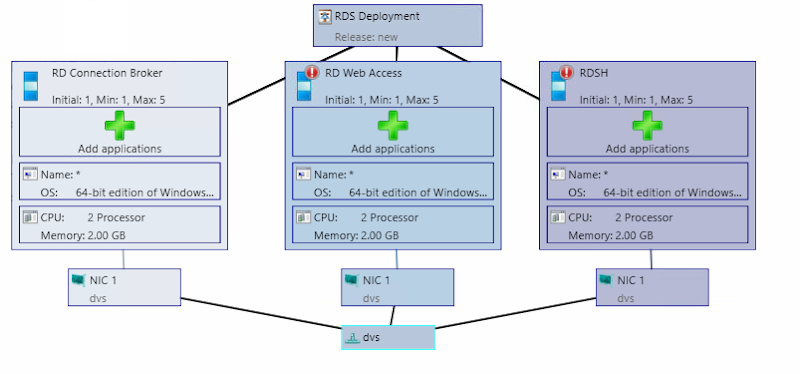

What about VMware Horizon in Azure?

VMware Horizon is also available in Azure via Horizon Cloud and has a similar basic architecture to the Citrix offer. Horizon Cloud acts as the control plane and provides the ability to automate the creation and management of resources within Azure. VMware has gone out of their way to make this as seamless as possible, providing the ability to integrate multi-cloud and local deployments all within the Horizon Cloud control plane, thus minimizing your need to touch external cloud services. From within this construct you can deploy applications, RDSH sessions or desktop VMs.

Horizon Cloud, once linked with Azure, provides fully customizable deployment options for RDSH farms that deliver applications or full session desktops. Notice that when creating a new farm, you are given an option to limit the number of sessions per RDSH server. That is about it from a resource control perspective for this offer.
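A per-server session limit caps the farm at servers × limit sessions, and the broker has to skip servers that are full. The sketch below shows both. The least-loaded placement rule is my assumption for illustration, not documented Horizon Cloud behavior.

```python
from typing import Dict, Optional


def farm_capacity(server_count: int, max_sessions_per_server: int) -> int:
    """Upper bound on concurrent sessions once the per-server limit is set."""
    return server_count * max_sessions_per_server


def place_session(active: Dict[str, int], max_sessions_per_server: int) -> Optional[str]:
    """Pick the least-loaded server still under the limit (ties broken by
    name); return None when every server is full."""
    open_servers = [(count, name) for name, count in active.items()
                    if count < max_sessions_per_server]
    if not open_servers:
        return None
    return min(open_servers)[1]
```

Because the limit is a session count rather than a CPU or memory threshold, it only bounds contention indirectly. DFSS still has to share CPU among whatever sessions land on each server.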

What about third party options?

Ivanti has become the primary consolidation point for prominent EUC-related tooling, most recently RES Software and AppSense. The Ivanti Performance Manager (IPM) tool (formerly AppSense Performance Manager) appears to be the most robust resource manager on the market today, and has been for a very long time. IPM can be used for granular CPU/memory throttling not only on RDSH but on any platform, including physical PCs and VDI desktops. I didn’t have access to IPM to demo here, so the images below are from Ivanti.com for reference.

IPM provides a number of controls for CPU, memory and disk that can be applied to applications or groups, including applications that spawn multiple chained processes, with a number of options to dial in the desired end state. Performance Manager has been a favorite for RDSH and XenApp environments for the better part of a decade, and it seems for good reason.
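Support for chained processes matters because a limit applied only to the parent misses CPU burned by its children. The hypothetical sketch below aggregates CPU across a process tree before comparing it to a limit. It illustrates the idea only; it is not IPM's rule engine. The PIDs and percentages are made up.

```python
from typing import Dict, List, Optional


def process_group(root: int, parent_of: Dict[int, Optional[int]]) -> List[int]:
    """Return root plus every process descended from it."""
    children: Dict[int, List[int]] = {}
    for pid, parent in parent_of.items():
        if parent is not None:
            children.setdefault(parent, []).append(pid)
    group: List[int] = []
    stack, seen = [root], set()
    while stack:
        pid = stack.pop()
        if pid in seen:  # guard against odd parent data, e.g. PID reuse
            continue
        seen.add(pid)
        group.append(pid)
        stack.extend(children.get(pid, []))
    return group


def group_cpu(root: int, parent_of: Dict[int, Optional[int]],
              cpu_percent: Dict[int, float]) -> float:
    """Total CPU % used by the root process and all of its descendants."""
    return sum(cpu_percent.get(pid, 0.0) for pid in process_group(root, parent_of))


def exceeds_limit(root: int, parent_of: Dict[int, Optional[int]],
                  cpu_percent: Dict[int, float], limit_percent: float) -> bool:
    """True if the whole process group is over the CPU limit."""
    return group_cpu(root, parent_of, cpu_percent) > limit_percent
```

In the test data below, the parent alone uses 10%, but the group as a whole uses 50%, so only the group-level check trips a 40% limit.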

Resources

Citrix WEM product documentation: https://docs.citrix.com/en-us/workspace-environment-management/current-release.html

A year of field experience with Citrix WEM: https://youtu.be/tFoacrvKOw8

Citrix XenApp Essentials start to finish: https://youtu.be/5R-yZcDJztc

Horizon Cloud & Azure: https://youtu.be/fuyzBuzNWnQ

Horizon Cloud Requirements: http://www.vmware.com/info?id=1434

Ivanti Performance Manager product guide: https://help.ivanti.com/ap/help/en_US/pm/10.1/Ivanti%20Performance%20Manager%2010.1%20FR4%20Product%20Guide.pdf

![image_thumb[3] image_thumb[3]](https://lh3.googleusercontent.com/-C22pzG0s0_c/WOgANobWEiI/AAAAAAAAP8o/TKjI3ce7xwU/image_thumb3%25255B1%25255D.png?imgmax=800)

![image_thumb[4] image_thumb[4]](https://lh3.googleusercontent.com/-TuQ4uZ3fLnk/WOgAOnBD5tI/AAAAAAAAP8s/KytmcLurOzY/image_thumb4%25255B1%25255D.png?imgmax=800)

![image_thumb[5] image_thumb[5]](https://lh3.googleusercontent.com/-28QnsVSyRa8/WOgAPZdHPyI/AAAAAAAAP8w/g2pBGkr4y7A/image_thumb5%25255B1%25255D.png?imgmax=800)

![image_thumb[6] image_thumb[6]](https://lh3.googleusercontent.com/-ifa1JyfHK1o/WOgAQB0EhlI/AAAAAAAAP80/2qFDUlxNO_I/image_thumb6%25255B1%25255D.png?imgmax=800)

![image_thumb[8] image_thumb[8]](https://lh3.googleusercontent.com/-XmjdbyYgc88/WOgAQ4-hpgI/AAAAAAAAP84/llX_HHevZdc/image_thumb8%25255B1%25255D.png?imgmax=800)

![image_thumb[1]](https://lh3.googleusercontent.com/-CFhZjiWn1Cs/WOf-WZFYd2I/AAAAAAAAP8Y/I95Rtf0SqBo/image_thumb11%25255B1%25255D.png?imgmax=800)

![image[27] image[27]](https://lh3.googleusercontent.com/-Oq1wjw4dZJs/V0MbMo00_gI/AAAAAAAAKIE/LIL5yFMUFo8/image27_thumb.png?imgmax=800)

![image[27] image[27]](https://lh3.googleusercontent.com/-iyUkLk6AFVw/V0Ma5tWmHXI/AAAAAAAAKHI/wS4MR0NAMQY/image27_thumb.png?imgmax=800)

![image[20] image[20]](https://lh3.googleusercontent.com/-Y7E9r0yyY9A/V09YyfmttaI/AAAAAAAAKaM/Q5SjTOgfZ9Q/image20_thumb%25255B1%25255D.png?imgmax=800)

![image[2] image[2]](https://lh3.googleusercontent.com/-rl9ko6wVaG4/V09YzwL9GKI/AAAAAAAAKaU/14-Zru4JoxE/image2_thumb%25255B1%25255D.png?imgmax=800)

![image[17] image[17]](https://lh3.googleusercontent.com/-Rm41V0N7xi8/V0-wj0pC6QI/AAAAAAAAKbs/INGB04eAngA/image17_thumb%25255B1%25255D.png?imgmax=800)