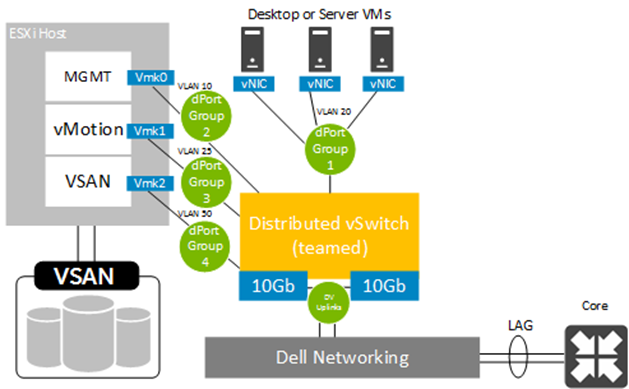

Part 1 – Architecture, Prep & Cluster Deployment

Part 2 – vCenter Deployment and Configuration

Part 3 – Network Configuration

Part 4 – Troubleshooting: things that can go wrong (you are here)

I love technology and HCI is awesome, but things can still go wrong. Here are a few issues that you hopefully won't run into, but if you do, here's how to deal with them. I broke and rebuilt this environment a couple of times to find out where some of the pitfalls are.

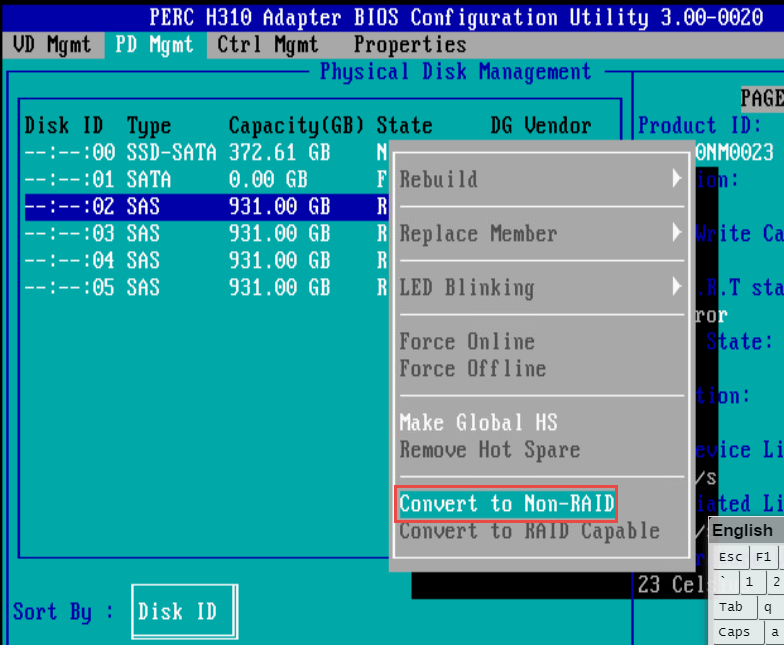

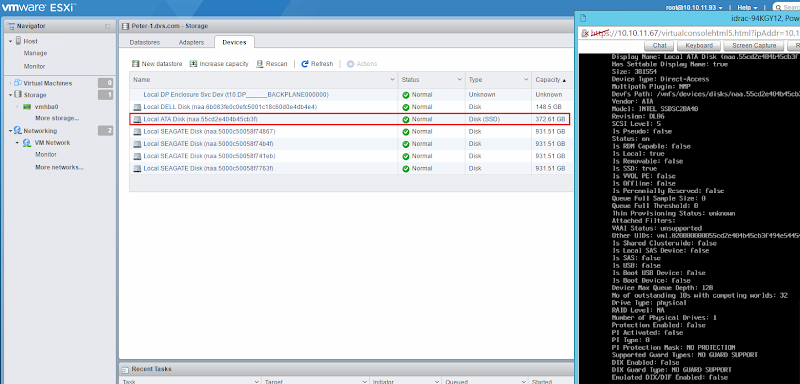

Disk Group Trouble

I had a small bit of disk weirdness immediately after building my HA cluster: my original host reported that one of its disk groups was unhealthy, with 6 absent disks. Interestingly, the SSD in this DG was recognized and online, but all the other disks were phantoms that were somehow created when I moved this host into the cluster. The two real HDDs were unassigned and waiting idly (disks in use: 10 of 12). Notice that the second disk group is healthy, which really underscores the value of running at least two DGs per host; I highly recommend two as a minimum. Don't allow a disk or DG failure to generate a node failure event! The neat thing about VSAN is that you can add multiple disk groups, which increases capacity and performance and spreads your risk across more, smaller failure domains.

This should be a rare occurrence, but to resolve it I first vMotioned my VCSA to another node. If you haven't already, enable vMotion on your existing VMkernel port (vmk) or create a new one for it. Then select an absent disk and remove it from the disk group. At this point I had no data to migrate, so there was no need for evacuation.

Once I removed the last absent disk, vSphere removed the disk group, which by then contained only the SSD. Open the Create Disk Group dialog and re-create the second DG, this time with all of the appropriate disks accounted for.
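If you would rather work from the ESXi shell than the Web Client, the steps above can be sketched with esxcli. This is a minimal sketch, not the procedure I actually used; it assumes ESXi 6.0 or later (for the `esxcli network ip interface tag` namespace). The helper names and the `run`/`DRY_RUN` convention are hypothetical: commands are only printed unless `DRY_RUN=0`, and every vmk name, VSAN disk UUID and `naa.*` device ID you pass in is a placeholder you must look up yourself (for example with `esxcli vsan storage list`).

```shell
#!/bin/sh
# Sketch: disk group repair from the ESXi shell.
# All vmk names, UUIDs and device IDs passed in are placeholders you supply.

# Print each command instead of running it unless DRY_RUN=0 (hypothetical convention).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Tag an existing VMkernel port for vMotion (ESXi 6.0+ tag namespace).
enable_vmotion() {
    run esxcli network ip interface tag add -i "$1" -t VMotion
}

# Remove absent disks by VSAN disk UUID, one UUID per argument.
remove_absent_disks() {
    for uuid in "$@"; do
        run esxcli vsan storage remove -u "$uuid"
    done
}

# Re-create a disk group: first argument is the cache SSD, the rest are capacity disks.
create_disk_group() {
    ssd=$1
    shift
    # Rotate the positional parameters so each capacity disk becomes a "-d <disk>" pair.
    for disk in "$@"; do
        set -- "$@" -d "$disk"
        shift
    done
    run esxcli vsan storage add -s "$ssd" "$@"
}
```

For example, `create_disk_group naa.SSD_ID naa.HDD1_ID naa.HDD2_ID` (hypothetical IDs) prints a single `esxcli vsan storage add` call with one `-s` and two `-d` flags; rerun it with `DRY_RUN=0` once the IDs look right.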

Now VSAN is happy, with all DGs healthy on all hosts, and I made this adjustment with zero service disruption.

I rebuilt this environment to try to recreate this problem, but it did not recur the second time through.

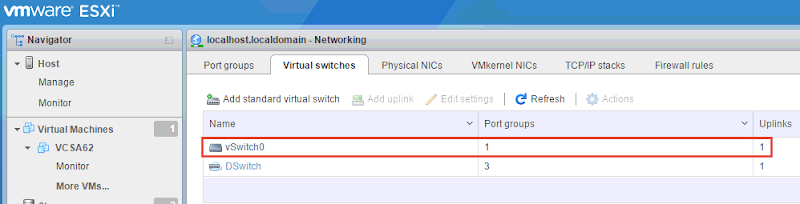

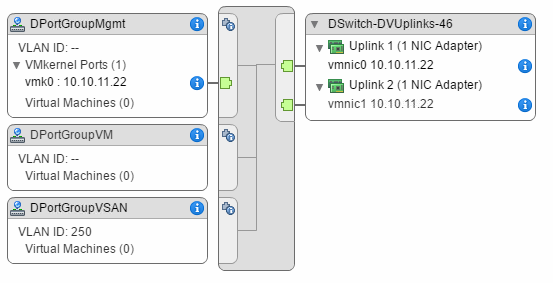

VCSA Stuck on VSS after vDS Migration

Migrating the hosts and the VCSA to the vDS at the same time left the VCSA stuck on the VSS with no uplinks. I tried this one just to see if it would work; it didn't. The VCSA migration method I laid out in part 2 of this series is the way to go. In this scenario the VCSA stayed on the local VSS because its vNIC was attached to the local port group. Below you can see that the VSS no longer had any vmnics, which of course took down vCenter.

To remedy this, you first need to manually remove a vmnic from the new vDS on the VCSA's host. Start by identifying the DVPort ID of the vmnic you would like to move:

```
esxcfg-vswitch -l
```
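Reading the port ID off the listing by eye works fine, but the lookup can also be scripted. Below is a minimal sketch of a hypothetical helper, `find_dvport_id`, that reads `esxcfg-vswitch -l` output on stdin and prints the DVPort ID whose Client column matches a given vmnic on a given vDS. It assumes the usual layout, where each vDS section starts with a `DVS Name` header followed by the switch's own row, then lists `DVPort ID  In Use  Client` rows; check it against your own host's output before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: print the DVPort ID that a vmnic occupies on a named vDS.
# Usage: esxcfg-vswitch -l | find_dvport_id <vmnic> <vDS name>
find_dvport_id() {
    awk -v nic="$1" -v dvs="$2" '
        # A standard switch section ends any vDS section we were in.
        /^Switch Name/ { in_dvs = 0; next }
        # The line after a "DVS Name" header starts with the switch name.
        /^DVS Name/ {
            if ((getline line) > 0)
                in_dvs = (substr(line, 1, length(dvs) + 1) == dvs " ")
            next
        }
        # DVPort rows: numeric port ID first, client (e.g. vmnic1) last.
        in_dvs && $1 ~ /^[0-9]+$/ && $NF == nic { print $1 }
    '
}
```

Matching on the switch-name prefix plus a trailing space keeps a vDS named `dvSwitch` from also matching one named `dvSwitch2`.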

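Remove that vmnic from the vDS. In the command below, replace vmnic with the name of the vmnic you picked, 17 with its DVPort ID and dvSwitch with the name of your vDS; -Q deletes the uplink from the DVPort specified by -V.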

```
esxcfg-vswitch -Q vmnic -V 17 dvSwitch
```

This vmnic should now be unassigned from the vDS and available to the local VSS. You can claim it with either the web-based Host Client or the desktop client.

Before the VSS would pass traffic, I had to remove the vmnic from it and then add it back (yep, two times). Now your VSS should have an uplink again, which means vCenter should be accessible.
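The Host Client steps, including the remove-and-re-add workaround, can also be sketched with esxcfg-vswitch, where `-L` links an uplink to a standard switch and `-U` unlinks it. This is a minimal sketch assuming the vmnic has already been freed with the `-Q` command above and that your standard switch name (for example `vSwitch0`) is a placeholder you supply. The link, unlink, link sequence simply mirrors the workaround described above rather than any documented requirement, and the `run`/`DRY_RUN` wrapper and helper name are hypothetical: commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Print each command instead of running it unless DRY_RUN=0 (hypothetical convention).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Hypothetical helper: attach a freed vmnic to a standard vSwitch, then unlink
# and relink it once more (the workaround that got the VSS passing traffic).
relink_uplink() {
    nic=$1
    vss=$2
    run esxcfg-vswitch -L "$nic" "$vss"
    run esxcfg-vswitch -U "$nic" "$vss"
    run esxcfg-vswitch -L "$nic" "$vss"
    # List the switches afterwards so you can confirm the uplink is present.
    run esxcfg-vswitch -l
}
```

Running this from the host's console rather than over SSH is the safer choice, since the host's own management networking may be affected while you are working on it.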

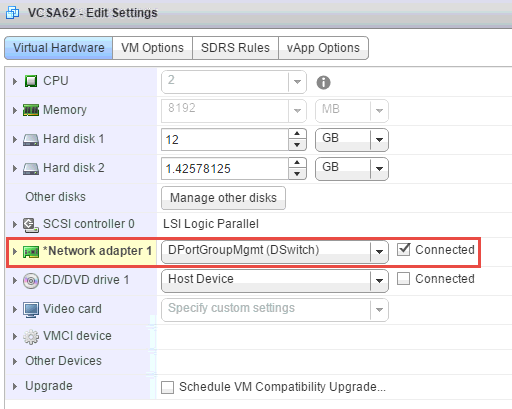

Next, change the VCSA's vNIC to connect to a distributed port group (DPortGroup) on the new vDS. Once this is complete, you can safely migrate the host's vmnic back to the vDS.
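Once the VCSA is on the DPortGroup, moving the vmnic back can be done in the Web Client. It can also be sketched from the shell by unlinking the vmnic from the VSS (`-U`) and adding it back to a DVPort on the vDS (`-P` together with `-V`). This is a minimal sketch; the switch names and port ID you pass in (for example `vSwitch0`, the DVPort the vmnic came from such as `17`, and `dvSwitch`) are placeholders, and the helper name and `run`/`DRY_RUN` wrapper are hypothetical, so commands are only printed unless `DRY_RUN=0`. Only run it after confirming that vCenter is reachable through the vDS.

```shell
#!/bin/sh
# Print each command instead of running it unless DRY_RUN=0 (hypothetical convention).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Hypothetical helper: return a vmnic from a standard switch to a DVPort on the vDS.
# Args: vmnic, standard switch, DVPort ID, vDS name
return_uplink_to_vds() {
    nic=$1
    vss=$2
    port=$3
    dvs=$4
    run esxcfg-vswitch -U "$nic" "$vss"               # detach from the VSS
    run esxcfg-vswitch -P "$nic" -V "$port" "$dvs"    # attach to the vDS DVPort
}
```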

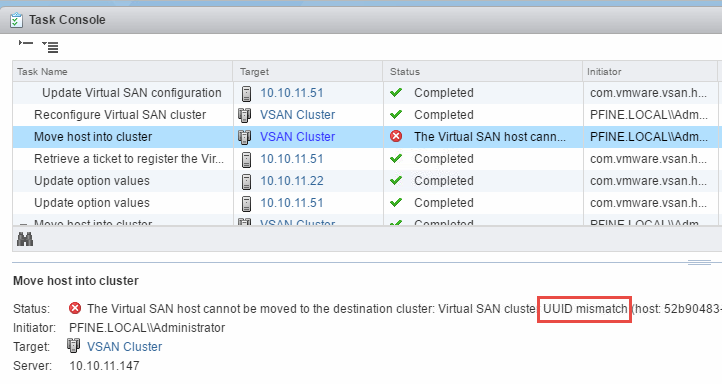

Storage Providers out of Sync

If you are unable to move a host into the VSAN cluster, your storage providers may be out of sync. This is indicated by a UUID mismatch error and a failed node move operation.

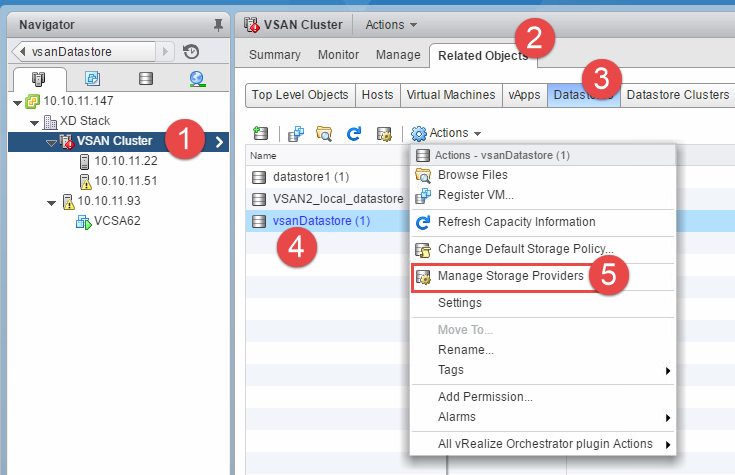

To resolve this, select the HA cluster object, click Related Objects > Datastores, then right-click the vsanDatastore and select Manage Storage Providers.

Click the icon with the red circular arrows to get all nodes back in sync, then retry your node add operation.

Restoring ESXi Hosts so you can Rebuild VSAN

Things went sideways for whatever reason and you need to go back to square one.

You can do this (a) the careful way or (b) the launch-the-nukes way.

(a) Remove a vmnic from the vDS using the esxcfg-vswitch command, create a new VSS, add the vmnic to it, then add a VMkernel port (VMK) to the VSS with a good IP. Repeat on each host.
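Option (a) can be sketched as a short script to run from each host's console (the DCUI shell or the iDRAC virtual console), because an SSH session riding on the vDS would drop mid-change. This is a minimal sketch under several assumptions: the vmnic name, DVPort ID, vDS name, new switch name and the 192.0.2.x documentation addresses are all placeholders, and `Management Network` is just the ESXi default port group name (pass a different one if that name is still in use on the old switch). Note that `esxcfg-vmknic -a` creates a new VMkernel port rather than moving the existing vmk. The helper name and `run`/`DRY_RUN` wrapper are hypothetical; commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Print each command instead of running it unless DRY_RUN=0 (hypothetical convention).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Hypothetical helper: move one uplink off the vDS onto a fresh standard switch
# and give the host a management VMkernel port there.
# Args: vmnic, DVPort ID, vDS name, new vSwitch name, IP, netmask, [port group]
restore_standard_networking() {
    nic=$1
    port=$2
    dvs=$3
    vss=$4
    ip=$5
    mask=$6
    pg=${7:-Management Network}
    run esxcfg-vswitch -Q "$nic" -V "$port" "$dvs"   # free the uplink from its DVPort
    run esxcfg-vswitch -a "$vss"                     # create the new standard switch
    run esxcfg-vswitch -L "$nic" "$vss"              # attach the freed uplink
    run esxcfg-vswitch -A "$pg" "$vss"               # add a port group for management
    run esxcfg-vmknic -a -i "$ip" -n "$mask" "$pg"   # new VMkernel port on that port group
}
```

For example, `restore_standard_networking vmnic1 17 dvSwitch vSwitch1 192.0.2.11 255.255.255.0` prints the five commands for review; export `DRY_RUN=0` and rerun it to apply them.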

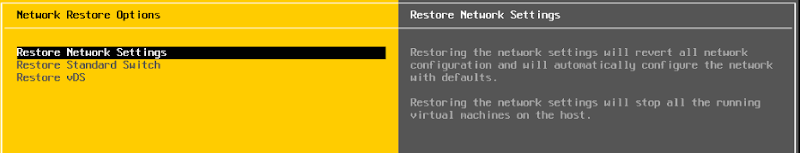

(b) Just delete the vDS, which will destroy all your networking (VMKs included) and will shut down all VMs. Log into iDRAC, launch the console, press F2 for settings, then select Network Restore Options > Restore Network Settings. This rebuilds your VSS and puts your management VMK on it with the last known IP config. Overall, this approach will get you back on the path more quickly.

Then delete the VCSA VM and the vsanDatastore, and start over.



![image](https://lh3.googleusercontent.com/-YlZCcDogq2w/V_RubCP2e3I/AAAAAAAANFs/_PtGnvk81BM/image%25255B64%25255D_thumb.png?imgmax=800)