Part 1: The Basics

Part 2: RDVH

Part 3: RDSH

Part 4: Scaling and HA (you are here)

Most environments that I’ve run across using native RDS for VDI (RDVH) tend to be fairly small, under 500 seats, but I have seen some larger footprints built around RDSH. The single largest problem for the native RDS solution is management, which tends to get pretty unwieldy with the native tools around the 500-user mark. PowerShell can and should be used in larger environments. The RD Connection Broker (RDCB) itself is capable of 10K concurrent connections, so the broker clearly supports scale, but unfortunately the surrounding native management tool stack isn’t up to the task and there isn’t much available to extend it either. Unidesk can be leveraged to extend the native RDS story (currently Server 2012 R2), providing much better manageability by integrating directly with the RDCB to create desktops and collections. Unidesk provides a good solution that fundamentally alters the deployed architecture using a highly scalable and robust layering model.

The other big consideration with scaling the native RDS stack is MAC address management in Hyper-V. This one is important, especially with compute host densities ever climbing as the semiconductor vendors pump out increasingly core-dense CPUs. By default, Hyper-V supports 256 unique MACs per host. Every Hyper-V host in the world uses the 3-octet prefix 00-15-5D; the next two octets are unique to each host and derived from its IP address assignment; the last octet is auto-generated between 00 and FF. The last octet is an 8-bit value, so it represents 256 possible MAC addresses. You can modify the 4th or 5th octets to increase the pool on a per-host basis, but be very careful that you don’t accidentally assign a range that overlaps with another host. In other words, don’t mess with this unless you really know what you’re doing. Another scenario to avoid is MAC address pool conflicts, which could happen if you deploy a Hyper-V host with a dynamic IP that is later leased to another new Hyper-V server. The very important lesson here is to use static IPs for your Hyper-V hosts.
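The pool arithmetic is easy to check before touching a host. The following is a minimal sketch, not a Hyper-V tool: the helper names are made up for illustration and the 4th and 5th octet values are hypothetical. It computes the size of an inclusive MAC range and tests two host ranges for overlap.

```python
from typing import Tuple


def mac_to_int(mac: str) -> int:
    """Convert a MAC such as '00-15-5D-0A-32-00' to an integer."""
    return int(mac.replace("-", "").replace(":", ""), 16)


def pool_size(minimum: str, maximum: str) -> int:
    """Number of addresses in an inclusive MAC range."""
    return mac_to_int(maximum) - mac_to_int(minimum) + 1


def pools_overlap(a: Tuple[str, str], b: Tuple[str, str]) -> bool:
    """True if two inclusive (min, max) ranges share at least one address."""
    a_min, a_max = mac_to_int(a[0]), mac_to_int(a[1])
    b_min, b_max = mac_to_int(b[0]), mac_to_int(b[1])
    return a_min <= b_max and b_min <= a_max


# Hypothetical pools: the 4th and 5th octets below are invented for illustration.
host_a = ("00-15-5D-0A-32-00", "00-15-5D-0A-32-FF")  # default-sized pool
host_b = ("00-15-5D-0A-33-00", "00-15-5D-0A-34-FF")  # widened across two 5th-octet values
host_c = ("00-15-5D-0A-34-00", "00-15-5D-0A-34-FF")  # collides with host_b

print(pool_size(*host_a))             # 256
print(pool_size(*host_b))             # 512
print(pools_overlap(host_a, host_b))  # False
print(pools_overlap(host_b, host_c))  # True
```

Widening host_b doubles its pool but also pushes it into the range host_c would get by default, which is exactly the kind of accidental overlap to watch for.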

What about SCVMM?

This question usually comes up in relation to RDS: do you need System Center Virtual Machine Manager (SCVMM), can you use SCVMM, and how does it integrate? The Citrix XenDesktop solution requires SCVMM as a component of that architecture for VM provisioning, but not so for RDS. In the case of RDS, not only is VMM not required at all, but there really isn’t a very direct integration path between the products. SCVMM here should be seen as an external management tool to complement the base set of tools used to manage your Hyper-V Failover Clusters, storage and VMs. So what can you do with VMM in an RDS deployment?

SCVMM can be used as a basic deployment enabler of the environment or as a provisioning/mgmt tool for unmanaged collections, but it does not integrate directly with the RDS farm or the RDCB. This means that SCVMM cannot be used to provision any VM intended to exist within a managed pool owned by the RDCB. You can use SCVMM to create VMs for an unmanaged collection or to deploy your RDSH VMs, while also benefiting from a much larger pool of assignable MAC addresses without worry of conflict or shortage.

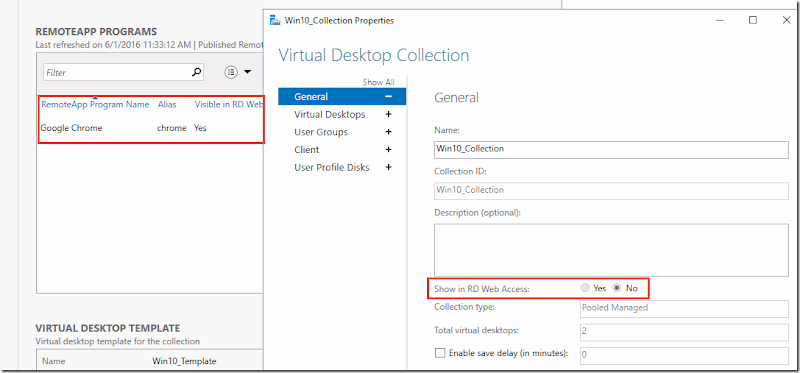

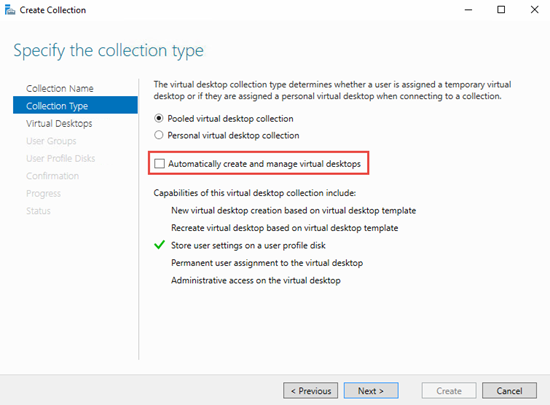

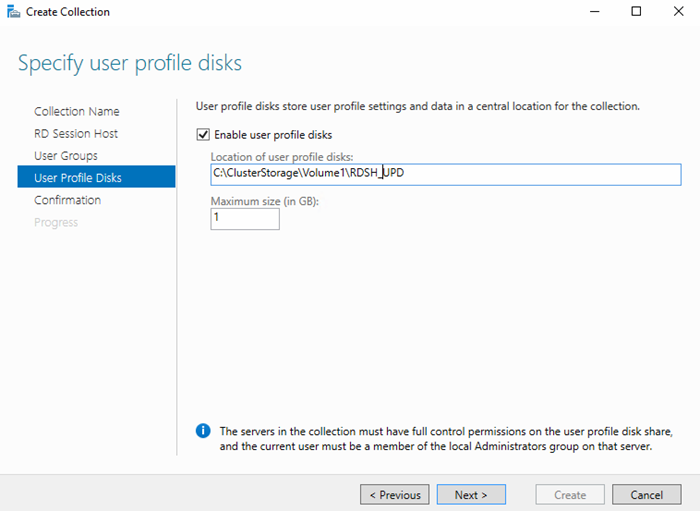

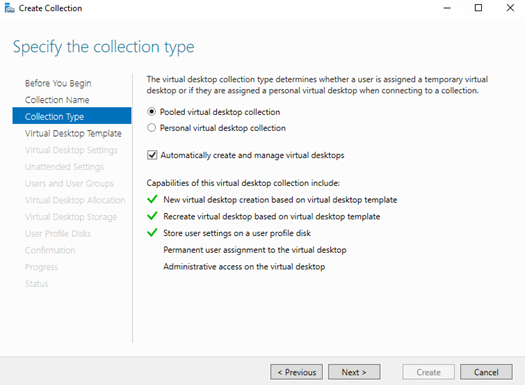

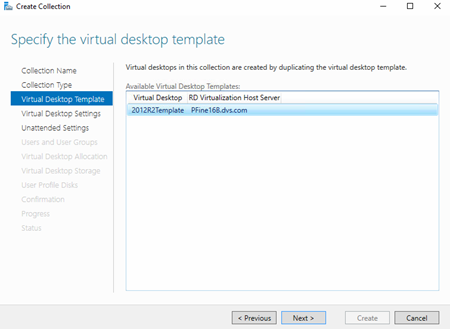

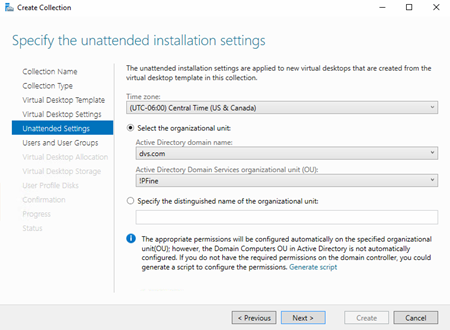

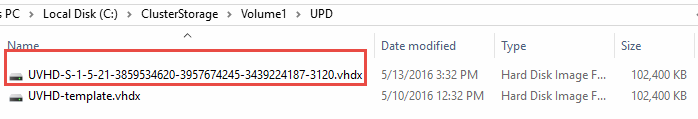

To fully appreciate what is possible here, it is important to understand the concept of unmanaged and managed collections in RDS. Managed collections are pools that the RDCB creates and maintains using a template VM, including the ability to recreate VMs as needed. Unmanaged collections are pools to which the RDCB brokers connections, but there is no template VM, so you have to create and manage the pool manually. Everything I’ve shown so far in this series has been “managed”, which is the most common deployment style due to ease of ongoing maintenance. If you want to use SCVMM to manage your desktop pool VMs and take advantage of features like Intelligent Placement and a massive MAC address pool, then you will need to use an unmanaged collection. This model is best suited for a 1:1 persistent desktop deployment and, as you can see below, can still make use of UPDs.

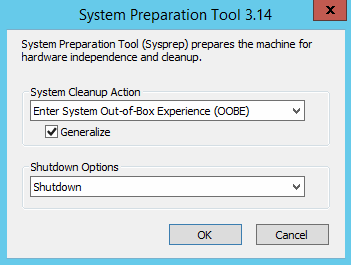

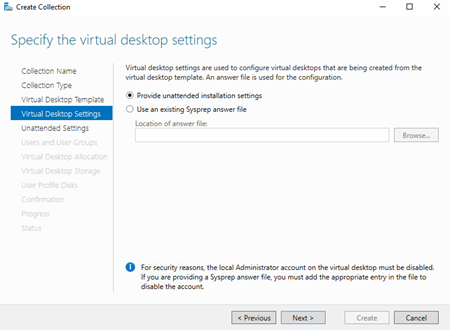

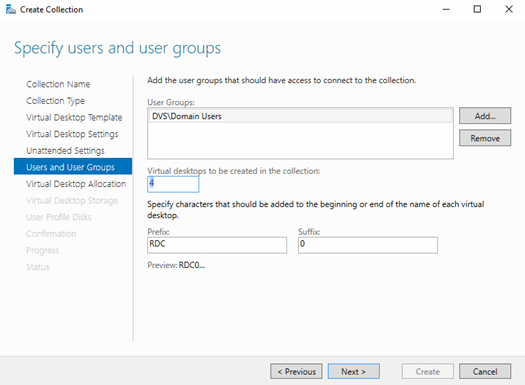

For example, in this deployment I have SCVMM 2016 running with SQL Server 2014 on a dedicated Server 2016 VM. I wish to deploy and manage a large RDS pool of persistent desktops using SCVMM. The first step is to create an unmanaged collection. This is specified during the creation of the collection by unchecking the “Automatically create and manage virtual desktops” option. Select any additional desired options and deploy.

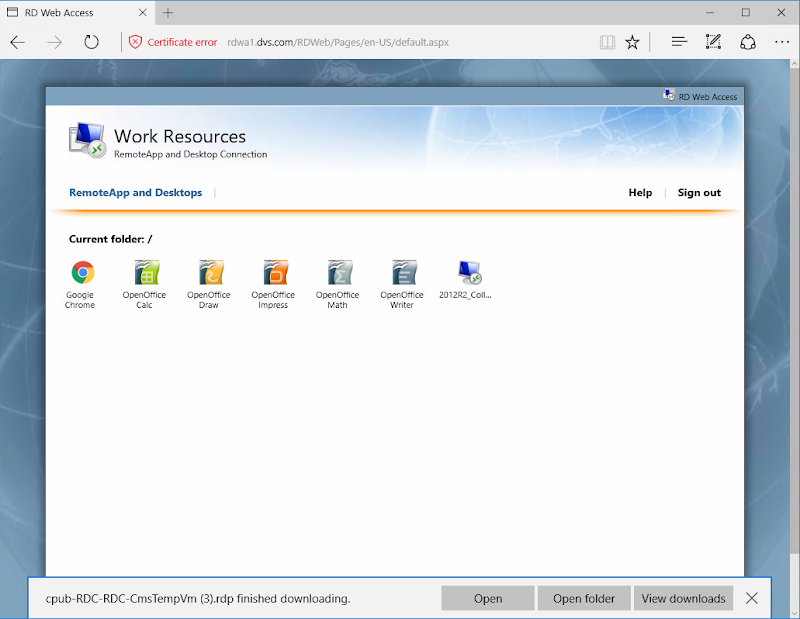

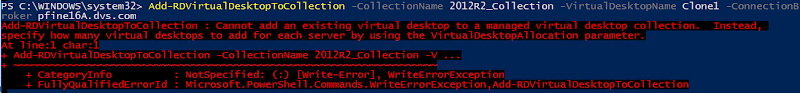

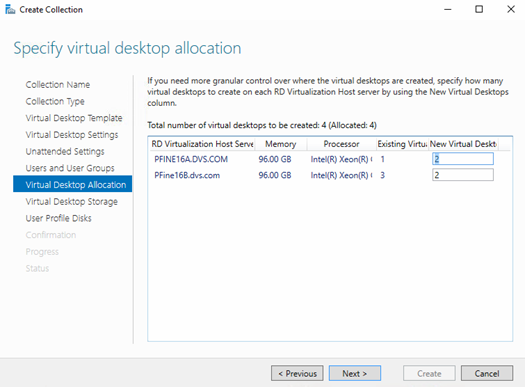

Once the collection is created, clone however many VMs are required using SCVMM via PowerShell and spread them across the cluster using SCVMM’s Intelligent Placement feature. There is no way in the SCVMM GUI to clone multiple VMs, so this operation is scripted; see Resources at the bottom. This method eliminates the concerns about too few or overlapping MAC addresses and balances the VMs across the cluster automatically based upon available capacity. Once the VMs are created, they need to be manually added to the new unmanaged collection. This can be done using PowerShell or Server Manager. Once this has been done, users will be able to see the collection in RD Web Access and the RDCB will be able to broker user connections to the pool. Thousands of VMs could be deployed this way and brokered using RDCB.

Add-RDVirtualDesktopToCollection -CollectionName Name -VirtualDesktopName Clone1 -ConnectionBroker RDCB.domain.com

Alternatively, VMs can be added to the unmanaged pool using Server Manager.

But wait, can’t I manually add existing VMs to a managed collection too? Nope! You can add additional VMs to a managed collection but they must be based on the template already assigned to the collection thus ensuring consistency.

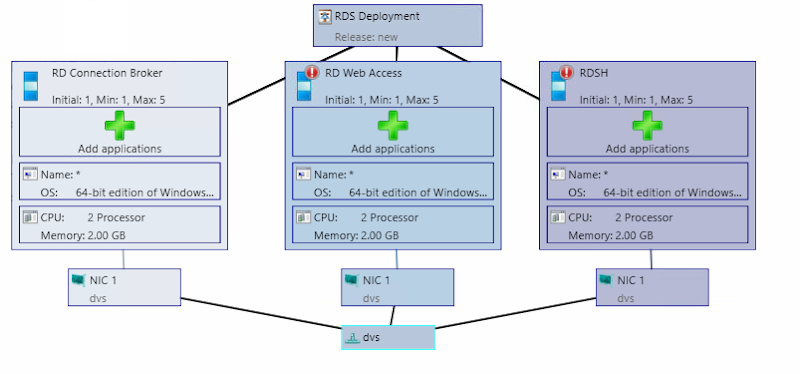

Service Templates

The other use case for SCVMM in the RDS context is deployment and scaling of the surrounding infrastructure using Service Templates. Within SCVMM, one can create a Service Template to deploy an entire RDS deployment or individual pieces of one. The Service Template element within SCVMM provides a visual method to build a master script that is used to provision management server VMs using a specific hardware configuration, with specific applications installed, in a specified order of execution. The possibilities here are nearly limitless, as you have at your disposal the full capability of any application, PowerShell or script. Lock down your Service Templates and you could build, rebuild or expand any deployment with the push of a button.

Scaling for Compute

I’ll talk about HA next, which inherently brings scalability to the management stack, but first consider compute as part of the architecture. Compute in this context refers to the physical Hyper-V hosts that provide resources for desktop or RDSH VMs, exclusively. The limitations of the compute layer will almost always be based on CPU. It is the one finitely exhaustible resource not easily expanded unless you upgrade the parts. Adjusting resources to provide additional IO, memory or network throughput is a straightforward process, linearly scalable via the capabilities of the server platform. To get the best bang for the buck, most customers seek to deploy the highest reasonable number of users per compute host. Hyper-V provides a great deal of CPU efficiency at the expense of slightly higher IO. Depending on the workload and VM profile, one could expect to see 5-10+ VMs per core in an RDS deployment. Compute hosts used for RDSH VMs will require fewer total VMs per physical host but have the potential to host a much larger number of total users. NUMA architecture alignment is important to ensure maximum performance in these scenarios. A higher number of cores per CPU is generally more important than the clock speed. Considering that it is easy to reach 256 VMs on a single compute host (the default MAC address limit provided by Hyper-V), the appropriate host hardware mix should be selected to ensure maximum performance and end user experience. Compute hosts can be added to a deployment in block fashion to satisfy a total desired number of users. Keep in mind the nuances of managing a native RDS stack at scale and whether or not it may make sense to invest in 3rd party solutions to bolster your deployment.
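The sizing logic above reduces to a few lines of arithmetic. This is a rough sketch under stated assumptions: the core counts, VMs-per-core figures and desktop totals are illustrative inputs, not measurements, and the function names are invented for this example. Density is capped at the default 256-address MAC pool and one spare host is added in the spirit of N+1.

```python
import math

HYPER_V_DEFAULT_MACS = 256  # default dynamic MAC pool size per host, per the text


def vms_per_host(cores: int, vms_per_core: int,
                 mac_limit: int = HYPER_V_DEFAULT_MACS) -> int:
    """VM density for one host: CPU-bound estimate capped by the MAC pool size."""
    return min(cores * vms_per_core, mac_limit)


def hosts_required(total_vms: int, per_host: int, spares: int = 1) -> int:
    """Hosts needed to run total_vms, plus spare hosts for failover (N+1 by default)."""
    return math.ceil(total_vms / per_host) + spares


# Illustrative inputs: a 28-core host at 7 VMs per core, 2000 desktops total.
density = vms_per_host(cores=28, vms_per_core=7)
print(density)                        # 196
print(hosts_required(2000, density))  # 12

# A denser 40-core host at 8 VMs per core hits the default MAC ceiling first.
print(vms_per_host(cores=40, vms_per_core=8))  # 256
```

In the second case the CPU estimate is 320 VMs, so the MAC pool rather than the processor becomes the limit unless the pool is widened or SCVMM manages the addresses.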

Solution High Availability

High availability can be configured for this solution in a number of different areas. The general principles of N+1 apply at all service levels, including physical components. The following guidance will provide a fully redundant RDS infrastructure:

- Add Top of Rack switching infrastructure for physical port redundancy

- Add Hyper-V compute and mgmt hosts for failover

- Configure Hyper-V hosts in a failover cluster to protect physical compute resources, using Cluster Shared Volumes to protect storage (ideally cluster mgmt and compute separately)

- Add load balancers to manage SSL offload and HTTPS connections from clients for RD Gateways and RD Web Access servers

- Add additional RD Gateway and RD Web Access servers to provide resiliency and redundancy

- Add additional RDCB servers configured to connect to a clustered SQL Server instance

- Add a 2nd license server VM configured with temporary licenses, deploy both via GPO but list the primary instance first. Should the primary fail, the secondary will serve the environment using temporary entitlements until the primary is restored.

- Cluster your file server or add a clustered NAS head back-ended by redundant shared storage for UPDs and user data

Here is another look at the larger architecture but within the context of providing HA:

RDCB HA

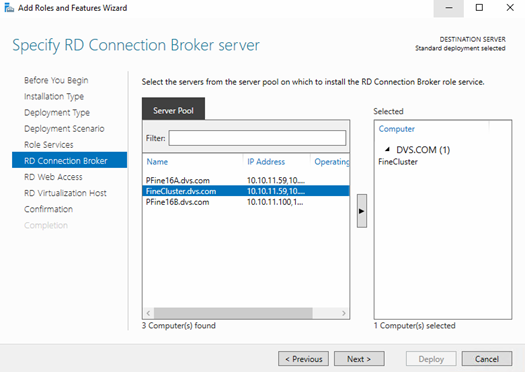

The RD Broker itself is the single most important role that needs special consideration and configuration to make it HA. Configuring HA for the RDCB creates a cluster with a DNS name assigned for load balancing that keeps track of the location and assignments of user sessions and desktops. First, create a new database on your SQL server with the RDCB server configured to have dbcreator permissions.

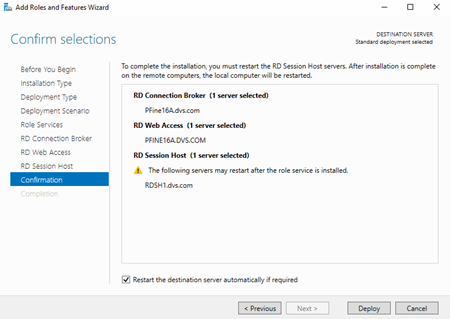

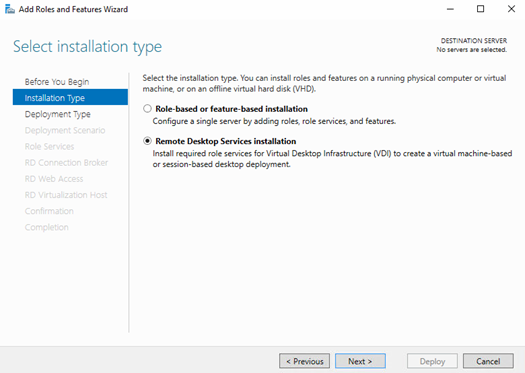

With SQL set up, install the SQL Native Client on all RDCB servers and launch the Configure High Availability wizard from the Deployment Overview.

Choose shared SQL mode, name the clustered RDCB instance and provide the SQL connection string in the following format.

DRIVER=SQL Server Native Client 11.0;SERVER=VMM16.dvs.com;Trusted_Connection=Yes;APP=Remote Desktop Services Connection Broker;DATABASE=RDSQL;
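To make the pieces of that string easier to see, here is a small sketch that assembles it from its parts. The function name is made up for illustration; the server and database values are the ones used in this deployment, and the driver version is an assumption you should match to whichever SQL Native Client is installed on your brokers.

```python
def rdcb_connection_string(sql_server: str, database: str,
                           driver: str = "SQL Server Native Client 11.0") -> str:
    """Assemble the key=value; pairs the HA wizard expects, in the order shown above."""
    parts = [
        ("DRIVER", driver),                # ODBC driver installed on each RDCB
        ("SERVER", sql_server),            # FQDN of the SQL Server instance
        ("Trusted_Connection", "Yes"),     # Windows authentication
        ("APP", "Remote Desktop Services Connection Broker"),
        ("DATABASE", database),            # database created for the broker
    ]
    # No spaces between pairs; every pair ends with a semicolon.
    return "".join(f"{key}={value};" for key, value in parts)


print(rdcb_connection_string("VMM16.dvs.com", "RDSQL"))
```

Swap in your own SQL Server FQDN and database name; everything else stays the same.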

Once successfully completed, the RDCB will show as HA mode in the Deployment Overview and additional brokers can be added using the same dialog.

RDSH HA

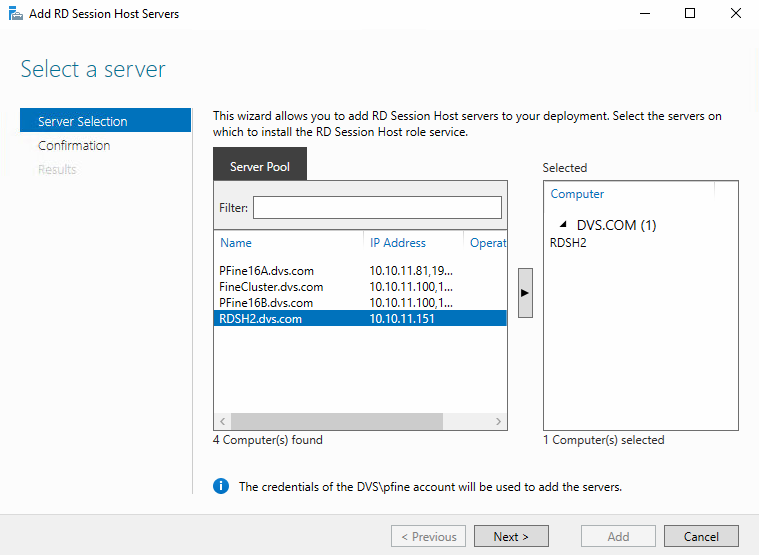

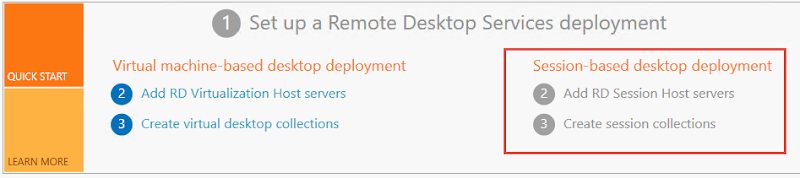

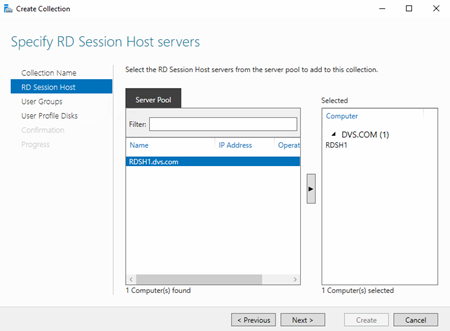

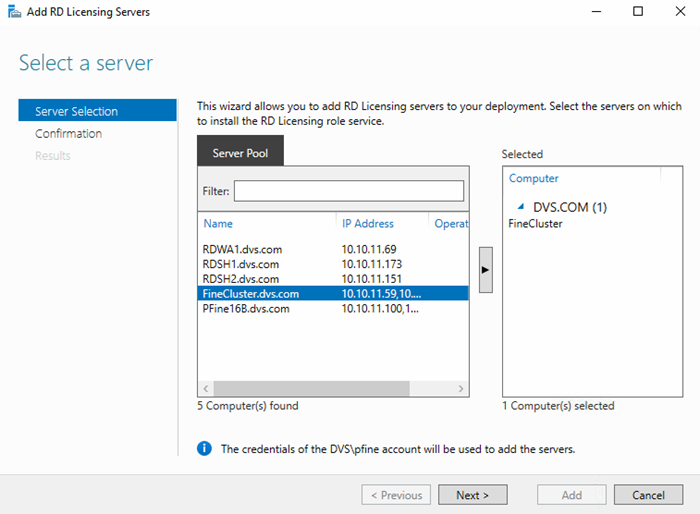

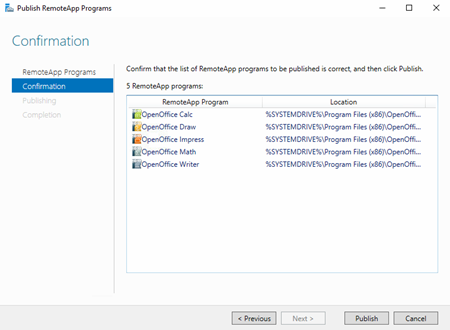

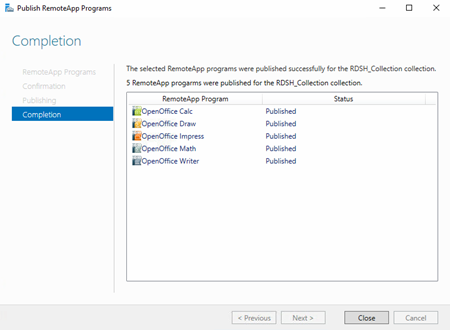

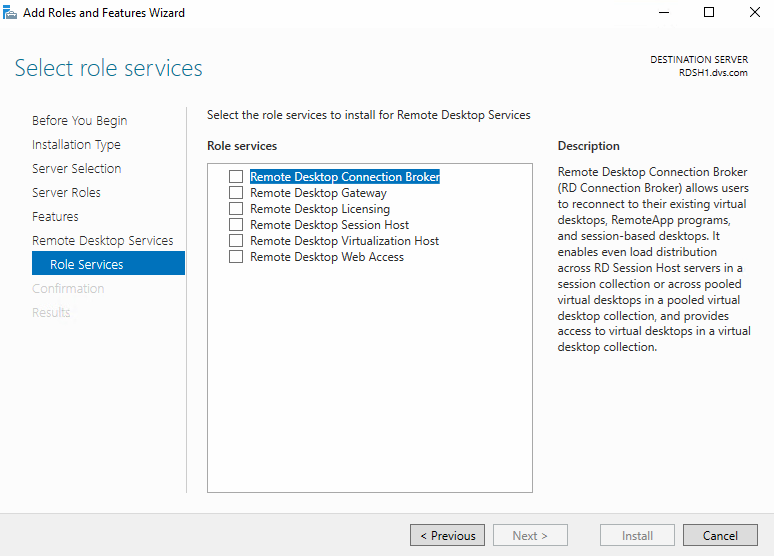

An RDSH collection can be scaled or made HA by adding additional RDSH VMs. Once your new RDSH VMs are created and have the appropriate applications installed, they must be added to the “All Servers” management pool within Server Manager.

Once all hosts or VMs are added to the server management pool, you can add the new VMs to your existing RDS deployment.

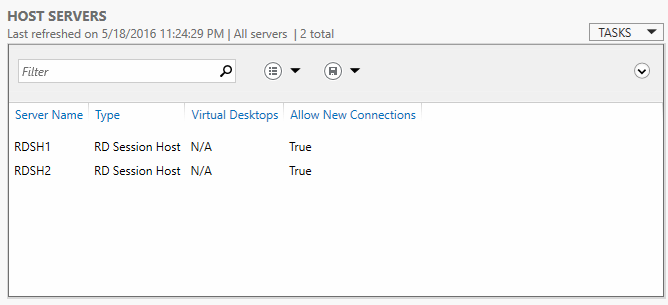

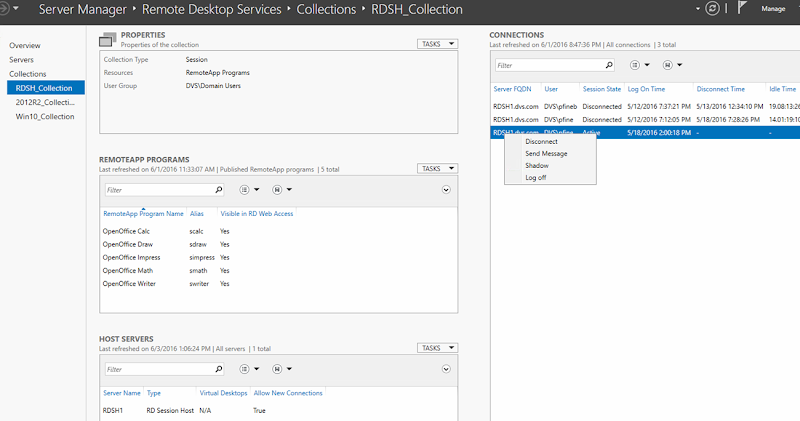

After additional RDSH servers are added to the overall RDS deployment, they can then be added to a specific session collection from the Host Servers dialog of the collection management page.

Once successfully added to the collection, all added server instances will be visible and available to accept connections.

Load balancing between multiple RDSH instances can be configured from within the collection properties dialog to ensure equal distribution or bias, if desired. Making a server “heavier” via relative weight will cause the Connection Broker to push more connections to it accordingly.
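As a rough mental model of relative weight, each server’s expected share of new connections can be thought of as its weight divided by the sum of all weights. The sketch below is a simplification for illustration only: the host names and weight values are hypothetical, and the Connection Broker’s actual placement logic may take other factors into account.

```python
from typing import Dict


def expected_share(weights: Dict[str, int]) -> Dict[str, float]:
    """Proportional share of new connections implied by each host's relative weight."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one host needs a positive weight")
    return {host: weight / total for host, weight in weights.items()}


# Hypothetical collection: RDSH3 is made twice as "heavy" as its peers.
weights = {"RDSH1": 100, "RDSH2": 100, "RDSH3": 200}

for host, share in expected_share(weights).items():
    print(f"{host}: {share:.0%}")
# RDSH1: 25%
# RDSH2: 25%
# RDSH3: 50%
```

Equal weights give an even split, and doubling one server’s weight doubles its share relative to each peer.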

RDWA HA

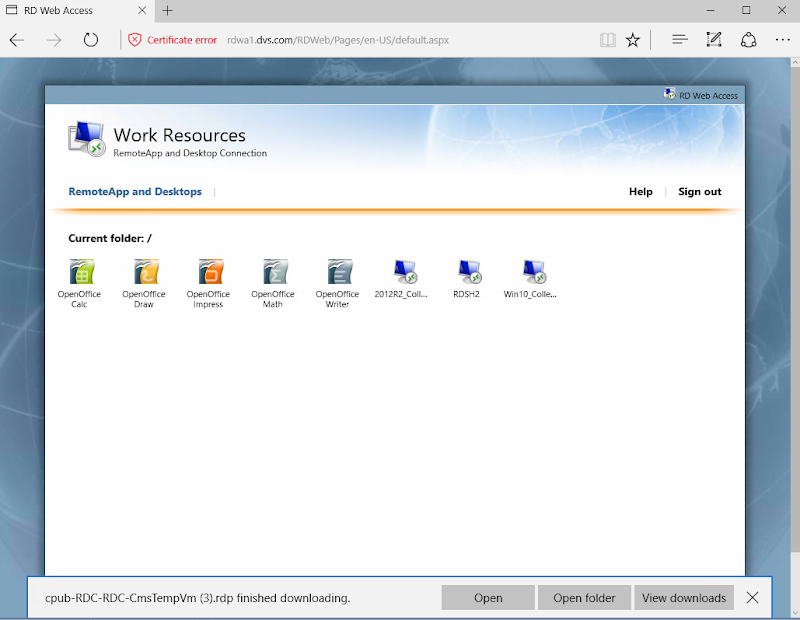

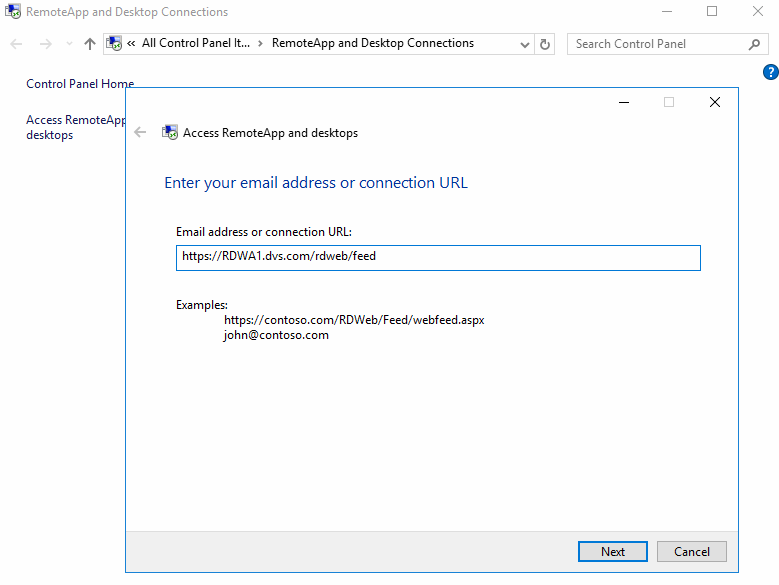

Additional RD Web Access servers can be added at any time from the Deployment Overview or Deployment Servers dialogs. Select the new server instance you wish to add, confirm and deploy. As always, make sure this server instance is added to the “All Servers” management pool. Behind the scenes IIS is configured on each selected instance to host the website and feed for RDweb.

Once deployed, you should see the new RDWA instance(s) in the RDS Deployment Properties accessible from the RDS Overview page.

Any collection made visible in RD Web Access will be accessible from any RDWA instance. RDWA instances can be accessed directly via URL, load balanced with DNS or put behind a hardware or software load balancer (F5/NetScaler).

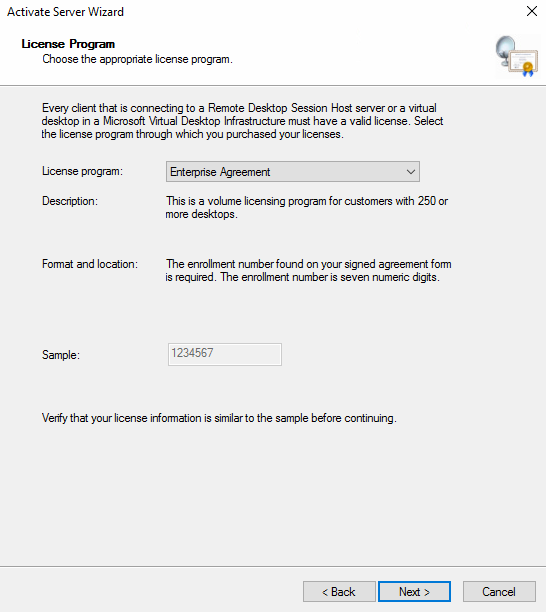

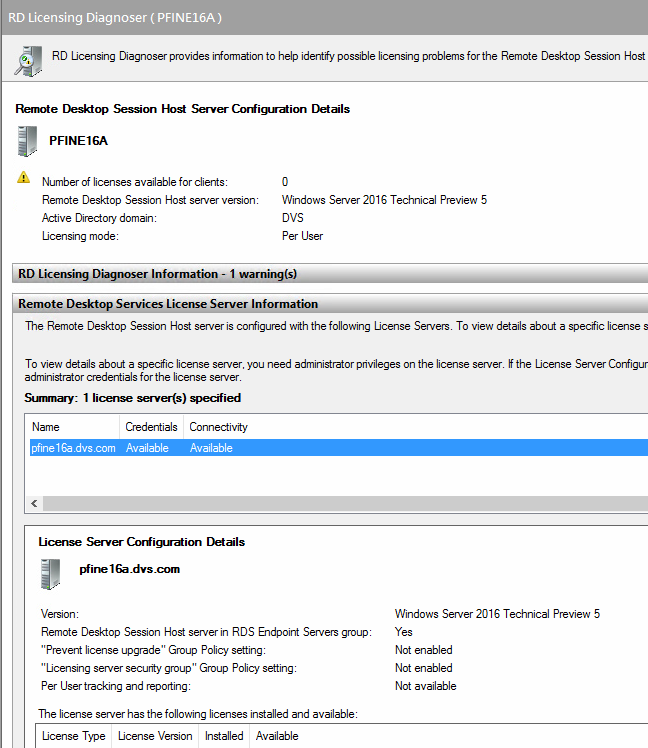

RD Licensing

RD Licensing is one of the trickier roles to make HA, as there is no easily exploitable native method to accomplish this. This is generally true regardless of the broker solution selected in this space. There are a couple of viable methods, both requiring manual intervention, that can be used to protect the RD Licensing role. The first method requires two VMs, each configured with the RD Licensing role and hosted on separate physical hosts. The first instance has the purchased licenses installed and validated by the Microsoft Clearinghouse. The second VM is configured with temporary licenses. Both instances are configured via GPO for users, but the server with the validated licenses is at the top of the list. Should the primary fail, users can still connect to the environment using temporary licenses until the primary is back online.

The other method also involves two VMs. The primary VM is configured with purchased licenses installed and validated by the Microsoft Clearinghouse. The VM is cloned, and the clone is shut down and moved to a separate physical host. Should the primary instance fail for whatever reason, the cold standby can then be powered on to resume the role of the primary. The caveat to this method is that if anything changes from a licensing perspective, the clone-to-cold-standby process needs to be repeated.
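The ordered-list behavior of the first method can be modelled as “use the first server in the list that answers.” This is only a sketch of that idea: the server names are hypothetical, the reachability check is a stand-in, and how RDS hosts actually walk the GPO list is assumed here rather than documented.

```python
from typing import Callable, List, Optional


def pick_license_server(ordered_servers: List[str],
                        is_reachable: Callable[[str], bool]) -> Optional[str]:
    """Return the first reachable server in GPO order, or None if all are down."""
    for server in ordered_servers:
        if is_reachable(server):
            return server
    return None


# Hypothetical names: primary holds purchased licenses, secondary holds temporary ones.
gpo_order = ["RDLIC1.domain.com", "RDLIC2.domain.com"]

all_up = lambda server: True
primary_down = lambda server: server != "RDLIC1.domain.com"

print(pick_license_server(gpo_order, all_up))        # RDLIC1.domain.com
print(pick_license_server(gpo_order, primary_down))  # RDLIC2.domain.com
```

Listing the primary first matters because the secondary only hands out temporary entitlements, so it should be reached only when the primary is unavailable.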

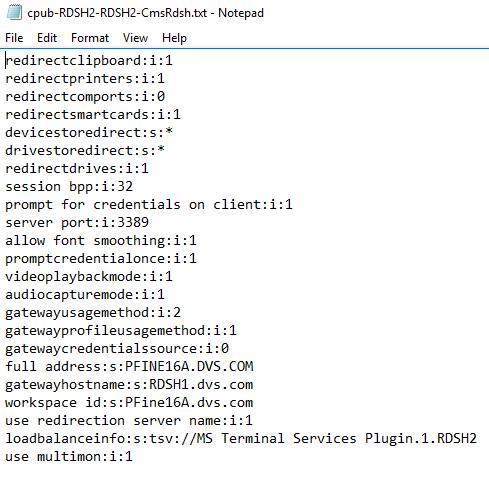

RD Gateway

To optimize and secure connections to the RDS farm from untrusted locations (the interwebs), RDGW can be used and made HA. RDGW terminates SSL for remotely connecting clients using two tunnels, one for incoming data and one for outgoing. UDP can also be used alongside the HTTP transport to optimize data transport over WANs. RDGW is installed like any other RDS role and includes IIS as a requisite part of the install. RD Gateway Manager is used to manage the configuration and policies of the gateway, including SSL certs and the transport settings that control the HTTP/UDP listeners. RDGW can also use RD Connection Authorization Policies (RD-CAP), which can be stored locally on the RDGW server or managed centrally on an NPS server. RDGW can be load balanced as a regular HTTP web service, including the offloading of SSL termination. DNS Round Robin is not supported and cannot be used in this scenario.

Failover Clustering and RDS

Lastly, a quick point on the role of Failover Clustering in RDS environments. Failover Clustering is recommended to provide HA for the Hyper-V environment and component-level protection of the RDS deployment. Should a node fail or require maintenance in a Failover Cluster, its VMs will be restarted on or evacuated to another node with available capacity. RDS is cluster aware in that it remains aware of the location of VMs within a Failover Cluster, including if they move around, but it does not integrate directly with nor make direct use of the Failover Cluster. In this context the resources for the VMs themselves can be protected, giving the management VMs a place to migrate or restart should a failure occur. Any storage added directly to the cluster should be converted to a Cluster Shared Volume, enabling multiple simultaneous writers to each volume. RDS itself doesn’t care what the underlying storage is, nor whether the environment is clustered or not. Remember that any provisioning activities you perform will address RDVH hosts directly, with the RDCB providing the ability to select the number of VMs deployed on each host.

Resources

Creating Service Templates for RDS in SCVMM

Deploying multiple VMs from a template in SCVMM (PoSH)

Hyper-V Dynamic MAC addressing for RDS
