VDI is one of the most intensive workloads in the datacenter today and by nature uses every major component of the enterprise technology stack: networking, servers, virtualization, storage, and load balancing. No stone is left unturned when it comes to enterprise VDI. Physical desktop management can also be an arduous task with large infrastructure requirements of its own. The sheer complexity of VDI drives a lot of interesting and feverish innovation in this space, but it also makes some organizations reluctant to adopt it for fear that the shift would be too burdensome for their existing teams and datacenters. The value proposition Unidesk 2.0 brings to the table is a simplification of the virtual desktops themselves, simplified management of the brokers that support them, and comprehensive application management.

The Unidesk solution plugs seamlessly into a new or existing VDI environment and is comprised of the following key components:

- Management virtual appliance

- Master CachePoint

- Secondary CachePoints

- Installation Machine

Solution Architecture

At its core, Unidesk is a VDI management solution that does some very interesting things under the covers. Unidesk requires vSphere at the moment but can manage VMware View, Citrix XenDesktop, Dell Quest vWorkspace, or Microsoft RDS. You could even manage each type of environment from a single Unidesk management console if you had the need or proclivity. Unidesk is not a VDI broker in and of itself, so that piece of the puzzle is very much required in the overall architecture. The Unidesk solution works from the concept of layering, which is increasingly becoming a hotter topic as both Citrix and VMware add native layering technologies to their software stacks. I’ll touch on those later. Unidesk works by creating, maintaining, and compositing numerous layers to create VMs that can share common items like base OS and IT applications, while providing the ability to persist user data including user installed applications, if desired. Each layer is stored and maintained as a discrete VMDK and can be assigned to any VM created within the environment. Application or OS layers can be patched independently and refreshed to a user VM. Because of Unidesk’s layering technology, customers needing persistent desktops can take advantage of capacity savings over traditional methods of persistence. A persistent desktop in Unidesk consumes, on average, a similar disk footprint to what a non-persistent desktop would typically consume.

CachePoints (CP) are virtual appliances that are responsible for the heavy lifting in the layering process. Currently there are two distinct types of CachePoints: Master and Secondary. The Master CP is the first to be provisioned during the setup process and maintains the primary copy of all layers in the environment. Master CPs replicate the pertinent layers to Secondary CPs, which have the task of actually combining layers to build the individual VMs, a process called Compositing. Due to the role played by each CP type, the Secondary CPs will need to live on the Compute hosts with the VMs they create. Local or Shared Tier 1 solution models can be supported here, but the Secondary CPs will need to be able to access the “CachePoint and Layers” volume at a minimum.
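To make the layering idea concrete, here is a minimal toy model of compositing. It is only a sketch: the `Layer` class, the path-to-content dictionaries, and the precedence order (OS, then applications, then personalization) are assumptions made for illustration, not a description of how Unidesk composites VMDKs internally.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Layer:
    """Toy stand-in for a layer; in Unidesk each layer is a discrete VMDK."""
    name: str
    kind: str  # "os", "app", or "personalization"
    files: dict = field(default_factory=dict)  # path -> content (illustrative)


# Assumed precedence: personalization overrides apps, apps override the OS.
PRECEDENCE = {"os": 0, "app": 1, "personalization": 2}


def composite(layers):
    """Merge layers into one file view, applying lowest precedence first."""
    view = {}
    for layer in sorted(layers, key=lambda l: PRECEDENCE[l.kind]):
        view.update(layer.files)
    return view


# Two desktops share the same OS and application layers.
win7 = Layer("Win7", "os", {"C:/Windows/notepad.exe": "os-v1"})
office = Layer("Office", "app", {"C:/Office/word.exe": "office-v1"})
alice = Layer("alice", "personalization", {"C:/Users/alice/notes.txt": "hello"})
bob = Layer("bob", "personalization", {"C:/Users/bob/todo.txt": "ship it"})

desktop_alice = composite([win7, office, alice])
desktop_bob = composite([win7, office, bob])

# Patching the OS layer once and recompositing keeps the user's data intact.
win7_patched = Layer("Win7", "os", {"C:/Windows/notepad.exe": "os-v2"})
desktop_alice_patched = composite([win7_patched, office, alice])
```

The point of the model is that shared layers are stored once while each desktop only adds its own personalization layer, which is why a persistent desktop can approach the footprint of a non-persistent one.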

The Management Appliance is another virtual machine that comes with the solution to manage the environment and individual components. This appliance provides a web interface used to manage the CPs, layers, images, as well as connections to the various VDI brokers you need to interface with. Using the Unidesk management console you can easily manage an entire VDI environment almost completely ignoring vCenter and the individual broker management GUIs. There are no additional infrastructure requirements for Unidesk specifically outside of what is required for the VDI broker solution itself.

Installation Machines are provided by Unidesk to capture application layers and make them available for assignment to any VM in the solution. This process is very simple and intuitive requiring only that a given application is installed within a regular VM. The management framework is then able to isolate the application and create it as an assignable layer (VMDK). Many of the problems traditionally experienced using other application virtualization methods are overcome here. OS and application layers can be updated independently and distributed to existing desktop VMs.

Here is an exploded and descriptive view of the overall solution architecture summarizing the points above:

Storage Architecture

The Unidesk solution is able to leverage three distinct storage tiers to house the key volumes: Boot Images, CachePoint and Layers, and Archive.

- Boot Images – Contains images having very small footprints and consist of a kernel and pagefile used for booting a VM. These images are stored as VMDKs, like all other layers, and can be easily recreated if need be. This tier does not require high performance disk.

- CachePoint and Layers – This tier stores all OS, application, and personalization layers. Of the three tiers, this one sees the most IO so if you have high performance disk available, use it with this tier.

- Archive – This tier is used for layer backup including personalization. Repairs and restored layers can be pulled from the archive and placed into the CachePoint and Layers volume for re-deployment, if need be. This tier does not require high performance disk.
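The tiering guidance above can be expressed as a small placement helper. This is a sketch under stated assumptions: the datastore names are hypothetical, and the only rule encoded is the one from the list, namely that “CachePoint and Layers” sees the most IO and should get the fastest disk available.

```python
# Volume name -> whether it benefits from high performance disk (per the list above).
HIGH_IO = {
    "Boot Images": False,
    "CachePoint and Layers": True,
    "Archive": False,
}


def place_volumes(fast=None, standard=None):
    """Map each Unidesk volume to a datastore.

    `fast` and `standard` are hypothetical datastore names. High-IO volumes
    prefer `fast`; the others prefer `standard`. If only one kind of
    datastore exists, everything lands on it.
    """
    if fast is None and standard is None:
        raise ValueError("at least one datastore is required")
    placement = {}
    for volume, high_io in HIGH_IO.items():
        preferred, fallback = (fast, standard) if high_io else (standard, fast)
        placement[volume] = preferred if preferred is not None else fallback
    return placement


two_tier = place_volumes(fast="tier1-ssd", standard="tier2-sata")
single_tier = place_volumes(standard="tier2-sata")
```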

The Master CP stores layers in the following folder structure, each layer organized and stored as a VMDK.

Installation and Configuration

New in Unidesk 2.x is the ability to execute a completely scripted installation. You’ll need to decide ahead of time what IPs and names you want to use for the Unidesk management components, as these are defined during setup. This portion of the install is rather lengthy, so it’s best to have things squared away before you begin. Once the environment variables are defined, the setup script takes over and builds the environment according to your design.
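Since names and IPs have to be settled before the scripted setup runs, it can help to sanity-check the plan first. The sketch below is not part of the Unidesk installer; the component keys, hostnames, addresses, and the assumption that everything sits in one subnet are all hypothetical and only illustrate the kind of pre-flight check you might run.

```python
import ipaddress


def validate_plan(plan, subnet):
    """Return a list of problems found in an address plan.

    `plan` maps a component label to a (hostname, ip) tuple. Checks that
    each IP parses, falls inside `subnet`, and that no hostname or IP is
    used twice.
    """
    network = ipaddress.ip_network(subnet)
    problems = []
    seen_hosts, seen_ips = set(), set()
    for component, (hostname, ip) in plan.items():
        try:
            addr = ipaddress.ip_address(ip)
        except ValueError:
            problems.append(f"{component}: invalid IP {ip!r}")
            continue
        if addr not in network:
            problems.append(f"{component}: {ip} is outside {subnet}")
        if addr in seen_ips:
            problems.append(f"{component}: duplicate IP {ip}")
        if hostname.lower() in seen_hosts:
            problems.append(f"{component}: duplicate hostname {hostname}")
        seen_ips.add(addr)
        seen_hosts.add(hostname.lower())
    return problems


# Hypothetical plan for the two components that exist right after setup.
plan = {
    "management_appliance": ("unidesk-mgmt", "10.0.0.10"),
    "master_cachepoint": ("unidesk-mcp", "10.0.0.11"),
}
issues = validate_plan(plan, "10.0.0.0/24")
```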

Once setup has finished, the Management appliance and Master CP will be ready, so you can log into the mgmt console to take the configuration further. Among the initial key activities to complete are setting up an Active Directory junction point and connecting Unidesk to your VDI broker. Unidesk should already be talking to your vCenter server at this point.

Your broker mgmt server will need to have the Unidesk Integration Agent installed, which you should find in the bundle downloaded with the install. This agent listens on TCP 390 and will connect the Unidesk management server to the broker. Once this agent is installed on the VMware View Connection Server or Citrix Desktop Delivery Controller, you can point the Unidesk management configuration at it. Once synchronized, all pool information will be visible from the Unidesk console.
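If the management console cannot synchronize with the broker, a quick first step is to confirm that the agent port is reachable. The helper below is a generic TCP connectivity check written for illustration; it is not a Unidesk tool, the default port is simply the TCP 390 mentioned above, and any hostname you pass in is your own.

```python
import socket


def agent_reachable(host, port=390, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened.

    This only proves that something is listening on the port; it does not
    verify that the listener is the Integration Agent.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `agent_reachable("view-cs01.example.local")` (a hypothetical Connection Server name) returning `False` would point to a firewall rule or a stopped agent service before you start troubleshooting in the Unidesk console.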

A very neat feature of Unidesk is that you can build many AD junction points from different forests if necessary. These junction points will allow Unidesk to interact with AD and provide the ability to create machine accounts within the domains.

Desktop VM and Application Management

Once Unidesk can talk to your vSphere and VDI environments, you can get started building OS layers which will serve as your gold images for the desktops you create. A killer feature of the Unidesk solution is that you only need a single gold image per OS type even for numerous VDI brokers. Because the broker agents can be layered and deployed as needed, you can reuse a single image across disparate View and XenDesktop environments, for example. Setting up an OS layer simply points Unidesk at an existing gold image VM in vCenter and makes it consumable for subsequent provisioning.

Once successfully created, you will see your OS layers available and marked as deployable.

Before you can install and deploy applications, you will need to deploy a Unidesk Installation Machine which is done quite simply from the System page. You should create an Installation Machine for each type of desktop OS in your environment.

Once the Installation Machine is ready, creating layers is easy. From the Layers page, simply select “Create Layer,” fill in the details, choose the OS layer you’ll be using along with the Installation machine and any prerequisite layers.

To finish the process, you’ll need to log into the Installation Machine, perform the install, then tell the Unidesk management console when you’re finished and the layer will be deployable to any VM.

Desktops can now be created as either persistent or non-persistent. You can deploy to already existing pools or, if you need a new persistent pool created, Unidesk will take care of it. Choose the type of OS template to deploy (XP or Win7), select the connected broker to which you want to deploy the desktops, choose an existing pool or create a new one, and select the number of desktops to create.

Next select the CachePoint that will deploy the new desktops along with the network they need to connect to and the desktop type.

Select the OS layer that should be assigned to the new desktops.

Select the application layers you wish to assign to this desktop group. All your layers will be visible here.

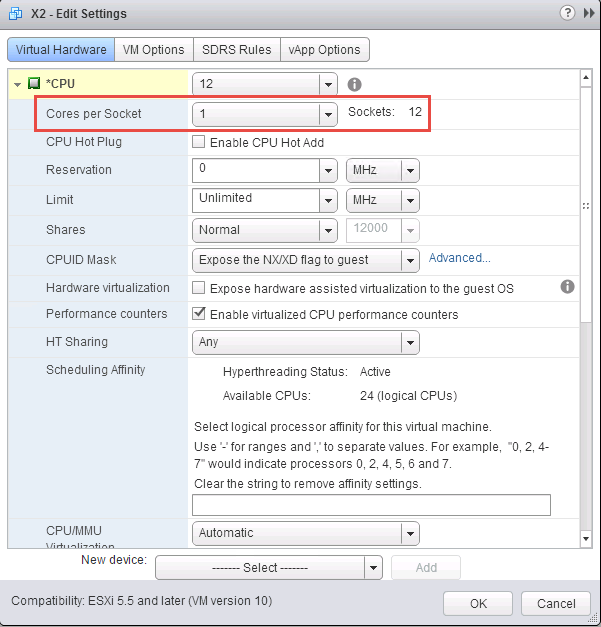

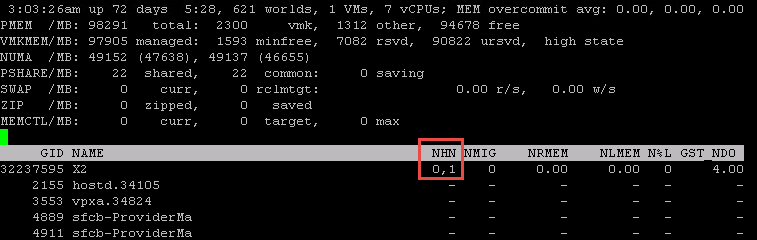

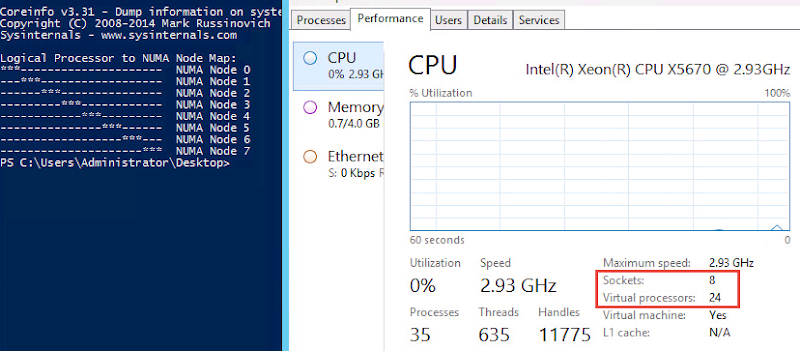

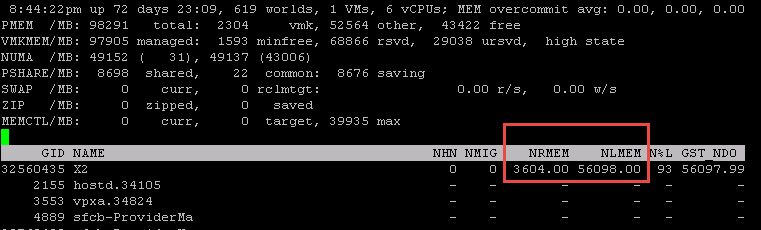

Choose the virtual hardware, performance characteristics and backup frequency (Unidesk Archive) of the desktop group you are deploying.

Select an existing or create a new maintenance schedule that defines when layers can be updated within this desktop group.

Deploy the desktops.
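The choices made across the wizard steps above can be summarized as a single request record. The sketch below is purely illustrative: Unidesk drives this through its web console, and the class, field names, and sample values here are hypothetical, chosen only to mirror the steps just described.

```python
from dataclasses import dataclass, field

KNOWN_TEMPLATES = {"XP", "Win7"}  # the two OS templates named in the text


@dataclass
class DesktopGroupRequest:
    """Hypothetical record of the choices made in the deployment wizard."""
    os_template: str
    broker: str
    pool: str
    count: int
    persistent: bool
    cachepoint: str
    network: str
    os_layer: str
    app_layers: list = field(default_factory=list)
    maintenance_schedule: str = ""

    def problems(self):
        """Return a list of human-readable issues; empty means ready to deploy."""
        issues = []
        if self.os_template not in KNOWN_TEMPLATES:
            issues.append(f"unknown OS template {self.os_template!r}")
        if self.count < 1:
            issues.append("count must be at least 1")
        for name in ("broker", "pool", "cachepoint", "network",
                     "os_layer", "maintenance_schedule"):
            if not getattr(self, name).strip():
                issues.append(f"{name} must be set")
        return issues


request = DesktopGroupRequest(
    os_template="Win7",
    broker="view-broker",
    pool="persistent-pool-01",
    count=25,
    persistent=True,
    cachepoint="secondary-cp-01",
    network="vdi-vlan",
    os_layer="Win7 gold",
    app_layers=["Office", "Reader"],
    maintenance_schedule="nightly",
)
ready = request.problems() == []
```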

Once the creation process is underway, the activity will be reflected under the Desktops page as well as in vCenter tasks. When completed, all desktops will be visible and can be managed entirely from the Unidesk console.

Sample Architecture

Below are some possible designs that can be used to deploy Unidesk into a Local or Shared Tier 1 VDI solution model. For Local Tier 1, both the Compute and Management hosts will need access to shared storage, even though VDI sessions will be hosted locally on the Compute hosts. 1Gb PowerConnect or Force10 switches can be used in the Network layer for LAN and iSCSI. The Unidesk boot images should be stored locally on the Compute hosts along with the Secondary CachePoints that will host the sessions on that host. All of the typical VDI management components will still be hosted on the Mgmt layer hosts along with the additional Unidesk management components. Since the Mgmt hosts connect to and run their VMs from shared storage, all of the additional Unidesk volumes should be created on shared storage. Recoverability is achieved primarily in this model through use of the Unidesk Archive function. Any failed Compute host VDI session information can be recreated from the Archive on a surviving host.

Here is a view of the server network and storage architecture with some of the solution components broken out:

For Shared Tier 1 the layout is slightly different. The VDI sessions and “CachePoint and Layers” volumes must live together on Tier 1 storage while all other volumes can live on Tier 2. You could combine the two tiers for smaller deployments, perhaps, but your mileage will vary. Blades are also an option here, of course. All normal vSphere HA options apply here with the Unidesk Archive function bolstering the protection of the environment.

Unidesk vs. the Competition

Both Citrix and VMware have native solutions available for management, application virtualization, and persistence, so you will have to decide if Unidesk is worth the price of admission. On the View side, if you buy a Premier license, you get ThinApp for applications, Composer for non-persistent linked clones, and soon the technology from VMware’s recent Wanova acquisition will be available. The native View persistence story isn’t great at the moment, but Wanova Mirage will change that when made available. Mirage will add a few layers to the mix including OS, apps, and persistent data but will not be as granular as the multi-layer Unidesk solution. The Wanova tech notwithstanding, you should be able to buy a cheaper, lower-level View license, since with Unidesk you will need neither ThinApp nor Composer. Unidesk’s application layering is superior to ThinApp, with little in the way of applications that cannot be layered, and can provide persistent or non-persistent desktops with almost the same footprint on disk. Add to that the Unidesk single management pane for both applications and desktops, and there is a compelling value to be considered.

On the Citrix side, if you buy an Enterprise license, you get XenApp for application virtualization, Provisioning Services (PVS), and Personal vDisk (PVD), the persistence technology from the recent RingCube acquisition. With XenDesktop you can leverage Machine Creation Services (MCS) or PVS for either persistent or non-persistent desktops. MCS is dead simple, while PVS is incredibly powerful but an extraordinary pain to set up and configure. XenApp builds on top of Microsoft’s RDS infrastructure and requires additional components of its own such as SQL Server. PVD can be deployed with either catalog type, PVS or MCS, and adds a layer of persistence for user data and user installed applications. While PVD provides only a single layer, that may be more than suitable for any number of customers. The overall Citrix solution is time tested and works well, although the underlying infrastructure requirements are numerous and expensive. XenApp offloads application execution from the XenDesktop sessions, which will in turn drive greater overall host densities. Adding Unidesk to a Citrix stack again allows a customer to buy in at a lower licensing level, although Citrix is seemingly reducing the value of augmenting its software stack by including more at lower license levels. For instance, PVD and PVS are available at all licensing levels now. The big upsell now is for the inclusion of XenApp. Unidesk removes the need for MCS, PVS, PVD, and XenApp, so you will have to ask yourself if the Unidesk approach is preferred to the Citrix method. The net result will certainly be less overall infrastructure required, but net licensing costs may very well be a wash.